- There will be a new language with broken lexical scope (remember, it's a criterion for success).

- A GWT-like, 80's style, object-oriented GUI framework will make Dart a major player in web apps.

Saturday, December 31, 2011

PL Predictions for 2012

Wednesday, December 21, 2011

Call-by-push-value

Looking back at predictions for 2011

▶ As more people use JSON, we'll see a XML renaissance, as we - for the first time - discover that XML actually gets some things right.

Didn't really happen, AFAICS. But there's this post: Why XML won’t die: XML vs. JSON for your API which says: "not everything in the world is a programming-language object". +1 to that.

▶ GHC will get typed type-level programming and end this highly embarrassing state of untypedness.

▶ We'll have a verified compiler-and-OS toolchain for compiling and running some kinds of apps. It won't be x86-based.

▶ All kinds of stuff targeting JavaScript.

▶ Split stacks and maybe some scheduler improvements will be shown competitive with green threads in the millions-of-threads!!1! (anti-)scenario.

Nobody did this AFAIK, but I still think it's true. Maybe next year.

Monday, December 19, 2011

what distinguishes a good program from a bad one

One might even say that the ability to generalize behavior of specific tests to the behavior of the program is precisely what distinguishes a good program from a bad one. A bad program is filled with many, many different cases, all of which must be tested individually in order to achieve assurance. (HT @dyokomizo)

Tuesday, December 13, 2011

Saturday, November 26, 2011

The extensible way of thinking

Aucbvax.5036

fa.editor-p

utzoo!decvax!ucbvax!editor-people

Mon Nov 9 17:17:40 1981

>From RMS@MIT-AI Mon Nov 9 16:43:23 1981

Union types do not suffice for writing MEMBER.

They may appear to be sufficient, if you look at a single program rather than at changing the program. But MEMBER is supposed to work on any type which does or EVER WILL exist. It should not be necessary to alter the definition of MEMBER and 69 other functions, or even to recompile them, every time a new type is added.

The only way to avoid this is if the system allows new alternatives to be added to a previously defined union type. And this must have the effect of causing all variables, functions and structures previously declared using the union type to accept the new alternatives, without the user's having to know what variables, functions or structures they are. Then you can define a union type called EVERYTHING and add all new types to that union. But then the system should save you trouble and automatically add all new types to the union EVERYTHING. This is tantamount to permitting you not to declare the type.

External variables are vital for extensible systems. A demonstration of this is in my EMACS paper, AI memo 519a.

Beliefs such as that type declarations are always desirable, and that external variables should be replaced by explicit parameters, and that it is good to control where a function can be called from, are based on a static way of looking at a program. There is only one program being considered, and the goal is to express that one program in the clearest possible manner, making all errors (in other words, all changes!) as difficult as possible. Naturally, mechanisms that incorporate redundancy are used for this.

The extensible way of thinking says that we want to make it as easy as possible to get to as many different useful variations as possible. It is not one program that we want, but options on many programs. Taking this as a goal, one reaches very different conclusions about what is good in a programming language. It looks less like ADA and more like Lisp.

It is no coincidence that "computer scientists" have been using the static view in designing their languages. The research has been sponsored for the sake of military projects for which that viewpoint may be appropriate. But it is not appropriate for other things. An editor designed to be reliable enough to be "man-rated" is a waste of effort; and if this unnecessary feature interferes with extensibility, it is actually harmful.

Friday, November 11, 2011

Happy Birthday Go

The Go Programming Language turns two.

I really want to like Go (Commander Pike is one of my heroes, and systems programming currently either means C or a Special Olympics scripting language). But I'm slightly put off by the lack of exceptions, and the seemingly gratuitous concepts offered instead. Does anyone have experience with how this aspect of Go pans out in real world use?

Thursday, November 10, 2011

Is JavaScript a Lisp in disguise?

Complex Lambda lists (optional, keyword, rest parameters): NO

THE HALLMARKS OF LISP HAVE ALWAYS BEEN EXTREME FLEXIBILITY AND EXTENSIBILITY, AND A MINIMUM OF NONSENSE THAT GETS IN YOUR WAY.*

Wednesday, November 9, 2011

Linkdump

▶ Nested Refinements: A Logic For Duck Typing (via @swannodette)

Programs written in dynamic languages make heavy use of features — run-time type tests, value-indexed dictionaries, polymorphism, and higher-order functions — that are beyond the reach of type systems that employ either purely syntactic or purely semantic reasoning. We present a core calculus, System D, that merges these two modes of reasoning into a single powerful mechanism of nested refinement types wherein the typing relation is itself a predicate in the refinement logic. System D coordinates SMT-based logical implication and syntactic subtyping to automatically typecheck sophisticated dynamic language programs. By coupling nested refinements with McCarthy’s theory of finite maps, System D can precisely reason about the interaction of higher-order functions, polymorphism, and dictionaries. The addition of type predicates to the refinement logic creates a circularity that leads to unique technical challenges in the metatheory, which we solve with a novel stratification approach that we use to prove the soundness of System D.

(See also The Shadow Superpower: the $10 trillion global black market is the world's fastest growing economy: "System D is a slang phrase pirated from French-speaking Africa and the Caribbean. The French have a word that they often use to describe particularly effective and motivated people. They call them débrouillards. To say a man is a débrouillard is to tell people how resourceful and ingenious he is.")

▶ I'm a big fan of the tagless-final approach! (This comment caught my interest, because it reminds me of Kernel: "everything in the language has to become both directly interpretable and available as an AST".)

▶ New extension to Haskell -- data types and polymorphism at the type level (Ωmega-like.)

▶ Evolution of DDD: CQRS and Event Sourcing (@dyokomizo hyped this weird-sounding stuff in response to Beating the CAP theorem).

Tuesday, November 8, 2011

Open, extensible composition models

The evaluator corrects several semantic inadequacies of LISP (described by Stoyan [5]) and is metacircular (written in the language it evaluates). The language provides:

- lists and atomic values, including symbols

- primitive functions called SUBRs

- symbolic functions (closures) called EXPRs

- a FIXED object that encapsulates another applicable value and prevents argument evaluation

- predicates to discriminate between the above types

- built-in SUBRs to access the contents of these values

- a way to call the primitive behaviour of a SUBR

- a quotation mechanism to prevent evaluation of literals

(my emphasis)

Saturday, October 29, 2011

They said it couldn’t be done, and he did it

Before C, there was far more hardware diversity than we see in the industry today. Computers proudly sported not just deliciously different and offbeat instruction sets, but varied wildly in almost everything, right down to even things as fundamental as character bit widths (8 bits per byte doesn’t suit you? how about 9? or 7? or how about sometimes 6 and sometimes 12?) and memory addressing (don’t like 16-bit pointers? how about 18-bit pointers, and oh by the way those aren’t pointers to bytes, they’re pointers to words?).

There was no such thing as a general-purpose program that was both portable across a variety of hardware and also efficient enough to compete with custom code written for just that hardware. Fortran did okay for array-oriented number-crunching code, but nobody could do it for general-purpose code such as what you’d use to build just about anything down to, oh, say, an operating system.

So this young upstart whippersnapper comes along and decides to try to specify a language that will let people write programs that are: (a) high-level, with structures and functions; (b) portable to just about any kind of hardware; and (c) efficient on that hardware so that they’re competitive with handcrafted nonportable custom assembler code on that hardware. A high-level, portable, efficient systems programming language.

How silly. Everyone knew it couldn’t be done.

C is a poster child for why it’s essential to keep those people who know a thing can’t be done from bothering the people who are doing it. (And keep them out of the way while the same inventors, being anything but lazy and always in search of new problems to conquer, go on to use the world’s first portable and efficient programming language to build the world’s first portable operating system, not knowing that was impossible too.)

Thanks, Dennis.

Monday, October 24, 2011

Toolstrapping is everywhere - can you see it?

Thursday, October 20, 2011

It's called programming

People get annoyed when they must write two lines of code instead of one, but I don't. It's called programming. – Rob Pike

Thursday, October 13, 2011

How to beat the CAP theorem

- make all your data immutable

- index snapshots of your data using "purely functional" batch computing (e.g. MapReduce)

- index the realtime data arriving after the last snapshot using an incremental computing system

- for querying, merge the index of the last batch job with that of the incremental system

Since the realtime layer only compensates for the last few hours of data, everything the realtime layer computes is eventually overridden by the batch layer. So if you make a mistake or something goes wrong in the realtime layer, the batch layer will correct it. All that complexity is transient.
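The recipe above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the page-view counting workload and the names `run_batch`, `RealtimeLayer`, and `query` are made up here, and a `Counter` over an in-memory list stands in for a real batch system such as MapReduce.

```python
from collections import Counter


def run_batch(log):
    """Recompute the full index from the append-only log (stand-in for a batch job)."""
    return Counter(log)


class RealtimeLayer:
    """Incrementally indexes only the events that arrived after the last batch run."""

    def __init__(self):
        self.index = Counter()

    def record(self, url):
        self.index[url] += 1


def query(batch_index, realtime, url):
    """Merge the batch view with the realtime view at read time."""
    return batch_index[url] + realtime.index[url]


log = ["/a", "/b", "/a"]       # append-only: events are never updated in place
batch_index = run_batch(log)   # result of the last completed batch job
realtime = RealtimeLayer()

for url in ["/a", "/c"]:       # events arriving after the snapshot
    log.append(url)
    realtime.record(url)

before = query(batch_index, realtime, "/a")

# The next batch run covers the new events, so the realtime state is thrown away.
batch_index = run_batch(log)
realtime = RealtimeLayer()
after = query(batch_index, realtime, "/a")
```

Both `before` and `after` give the same answer for `/a`; the second one no longer depends on anything the realtime layer computed, which is the sense in which its complexity is transient.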

Tuesday, October 11, 2011

Programming Language Checklist

Thursday, September 29, 2011

Alan Kay on type systems

I'm not against types, but I don't know of any type systems that aren't a complete pain, so I still like dynamic typing. (HT Paul Snively)

Monday, September 19, 2011

Delimited continuations papers 2

A monadic framework for delimited continuations: Delimited continuations are more expressive than traditional abortive continuations and they apparently seem to require a framework beyond traditional continuation-passing style (CPS). We show that this is not the case: standard CPS is sufficient to explain the common control operators for delimited continuations. We demonstrate this fact and present an implementation as a Scheme library. We then investigate a typed account of delimited continuations that makes explicit where control effects can occur. This results in a monadic framework for typed and encapsulated delimited continuations which we design and implement as a Haskell library.

Thursday, September 8, 2011

The Kernel Underground

- SINK, by John Shutt. Scheme.

- klisp, by Andres Navarro. C.

- js-vau and vau.rkt by Tony Garnock-Jones. JavaScript and Racket.

- Hummus, Cletus, and Pumice, by Alex Suraci. Haskell, Atomy, and RPython.

- Schampignon, Virtua, and Wat by me. JavaScript.

- Klink, by Tom Breton. C.

- Karyon, by Jordan Danford. Python.

- εlispon, by Pablo Rauzy. C.

- fexpress, by Ross Angle. JavaScript.

- Qoppa, by Keegan McAllister. Scheme.

- Schrodinger Lisp, by Logan Kearsley. Python.

- squim, by Mariano Guerra. JavaScript.

- Nulan, by Pauan. Python.

- IronKernel, by Ademar Gonzalez. F#.

- Bronze Age Lisp, by Oto Havle. Assembly.

- Pywat, by Piotr Kuchta. Python.

- Wat.pm, by unknown. Perl.

- Extensible Clojure

Wednesday, September 7, 2011

Monday, September 5, 2011

Thursday, September 1, 2011

Mortal combat

The scheme community is now very invested in its macrology; they got there by long hard work and emotional processing and yelling and screaming and weeping and gnashing of teeth, and they still remember the pain of not having a standard macrology. You will not pry it away except from their cold dead fingers, and you will not redefine it without defeating them in mortal combat.

I think the commenter misunderstood my earlier post. Syntactic extension is absolutely necessary, and modern Scheme macro systems are a fine way to do it.

On macros

It has been my impression that, no matter what specific thing you use macros to do, you can later invent a neat language feature that handles the problem in a clean and nice manner. The problem is the "later". Macros can do it "now"... in an ugly, brutish manner, true, but the job gets done. ...

Even among people who find macros smelly, you'll find many who think macros the least distasteful of these choices.

John Shutt's response ties this in with fexprs - instead of delegating the job to a separate preprocessing step, let the language itself have the power of self-extension:

Macros are an unpleasant feature to compensate, somewhat, for limitations of a language. It's better to compensate for the limitations than to leave the limitations in place without compensating at all. Better still, though, would be to eliminate the offending limitations. (Remove the weaknesses and restrictions that make additional features appear necessary.) Make no mistake, fexprs don't necessarily increase the uniform flexibility of the language; but with care in the design they can do so, and thereby, the jobs otherwise done by macros are brought within the purview of the elegant language core. So instead of an ugly brutish fix with macros now and hope for a more elegant replacement later, one can produce a clean solution now with fexprs.

PS: This point is subtle. After all Scheme is already self-extensible. But Scheme macros still are an additional "layer" on top of, and separate from, the core language. Kernel's fexprs are not - they are the core language, thus bringing self-extension within the purview of the elegant language core.

Sunday, August 28, 2011

Thursday, August 25, 2011

SRII 2011 - Keynote Talk by Alan Kay

"The internet is practically the only real object-oriented system on the planet." (~30:00)

SRII 2011 - Keynote Talk by Alan Kay - President, Viewpoints Research Institute from SRii GLOBAL CONFERENCE 2011 on Vimeo.

Also, the notion of reinventing the flat tire can't get enough airtime.

Tuesday, August 23, 2011

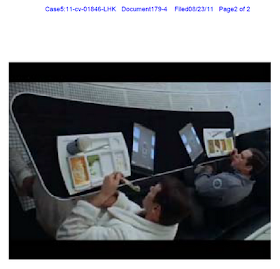

Totally awesome: FOSS Patents: Samsung cites "2001" movie as prior art against iPad patent

Attached hereto as Exhibit D is a true and correct copy of a still image taken from Stanley Kubrick's 1968 film "2001: A Space Odyssey." In a clip from that film lasting about one minute, two astronauts are eating and at the same time using personal tablet computers. The clip can be downloaded online at http://www.youtube.com/watch?v=JQ8pQVDyaLo. As with the design claimed by the D’889 Patent, the tablet disclosed in the clip has an overall rectangular shape with a dominant display screen, narrow borders, a predominately flat front surface, a flat back surface (which is evident because the tablets are lying flat on the table's surface), and a thin form factor.

Sunday, August 21, 2011

Software is a branch of movies

"Virtual" does not mean, as many think, three-dimensional. It is the opposite of *real*.

Virtuality therefore means the *apparent structure of things*

Engineers generally deal with constructing reality. Movie-makers and software designers deal with constructing virtuality.

Software is a branch of movies.

Movies enact events on a screen that affect the heart and mind of a viewer. Software enacts events on a screen which affect the heart and mind of a user, AND INTERACT.

Saturday, August 20, 2011

Praising Kernel

Friday, August 19, 2011

Virtua subjotting

The Virtua programming language

- combined object lambda architecture

- delimited continuations

- fexprs

- plus, of course, all the good stuff we're used to from Lisp: sane syntax, numerical tower, ...

Thursday, August 18, 2011

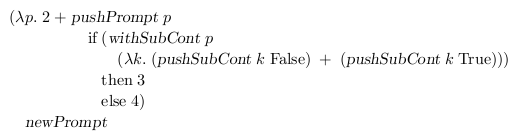

Notes on delimited continuations

- newPrompt --- creates a fresh prompt, distinct from all other prompts. It's just an object that we use as an identifier, basically. Some systems simply use symbols for prompts.

- pushPrompt p e --- pushes prompt p on the stack, and executes expression e in this new context. This delimits the stack, so we can later capture a delimited continuation up to this part of the stack.

- withSubCont p f --- aborts (unwinds the stack) up to and including the prompt p, and calls the function f with a single argument k representing the delimited continuation from the call to withSubCont up to but not including the prompt. This captures a delimited continuation, analogous to how call/cc captures an undelimited continuation.

- pushSubCont k e --- pushes the delimited continuation k on the stack, and executes expression e in this new context. This composes the stack of k with the current stack, analogous to how calling the function representing a continuation in Scheme swaps the current stack with the stack of that continuation.

A little Scheme interpreter

Going co-nuclear

The meme set carried by the [memetic] organism includes a mass of theories, some of which contradict each other. At the center of this mass is a nucleus of theories that are supposed to be believed (part of what Kuhn called a paradigm). Surrounding the nucleus are theories that are meant to be contrasted with the paradigm and rejected, together with memes about how to conduct the contrast; one might call this surrounding material the co-nucleus.

Sunday, August 7, 2011

Intellectual badass Sunday reading

"Nerd? We prefer the term intellectual badass." – Unknown

AMD Fusion Developer Summit Keynotes, about AMD's upcoming "Cray on a Chip". Basically, they'll make the parallel processors (formerly known as GPUs) coherent with the brawny sequential CPUs, allowing you to "switch the compute" between sequential and parallel, while operating on the same data (e.g. fill an array sequentially, then switch to parallel processing to crunch the data, all in the same program - and memory space).

[racket] design and use of continuation barriers, by Matthew Flatt. "Use a continuation barrier when calling unknown code and when it's too painful to contemplate multiple instantiations of the continuation leading to the call."

Tutorial: Metacompilers Part 1, about META II, the one Alan Kay and the other VPRI folks always talk about. "You won't really find metacompilers like META II in compiler textbooks as they are primarily concerned with slaying the dragons of the 1960s using 1970s formal theory."

Microsoft and the Bavarian Illuminati: "In the Object Linking and Embedding 2.0 Programmer's Reference there is a very curious term. On page 78, the second paragraph starts with the sentence, 'In the aggregation model, this internal communication is achieved through coordination with a special instance of IUnknown interface known as the /controlling unknown/ of the aggregate.'"

Saturday, August 6, 2011

Sunday, July 31, 2011

The meaning of forms in Lisp and Kernel

($define! $lambda

($vau (formals . body) env

(wrap (eval (list* $vau formals #ignore body)

env))))

Through the power of fexprs, Kernel needs only three built-in operators (define, vau, and if) as well as some built-in functions. This is vastly simpler than any definition of Scheme! Scheme built-ins such as lambda, set! (!), define-syntax and others fall directly out of fexprs, for free, radically simplifying the implementation. But I'm rambling.

Some history

This [not properly tail-calling] behavior of the SECD machine, and more broadly of many compilers and evaluators, is principally a consequence of the early evolution of computers and computer languages; procedure calls, and particularly recursive procedure calls, were added to many computer languages long after constructs like branches, jumps, and assignment. They were thought to be expensive, inefficient, and esoteric. Guy Steele outlined this history and disrupted the canard of expensive procedure calls, showing how inefficient procedure call mechanisms could be replaced with simple “JUMP” instructions, making the space complexity of recursive procedures equivalent to that of any other looping construct.

Though OOP came from many motivations, two were central. ... [T]he small scale one was to find a more flexible version of assignment, and then to try to eliminate it altogether.
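Coming back to the $lambda definition earlier in this post: to see how lambda can fall out of $vau, wrap and eval, here is a deliberately tiny evaluator sketch in Python. Everything about the encoding is an assumption made for illustration and not how Kernel or any real implementation works: forms are Python lists, symbols are plain strings, `["formals", ".", "body"]` stands for the dotted list `(formals . body)`, and the `IGNORE` object stands for `#ignore`.

```python
IGNORE = object()  # stand-in for Kernel's #ignore


class Env:
    """A first-class environment with a parent link."""

    def __init__(self, parent=None):
        self.vars = {}
        self.parent = parent

    def lookup(self, name):
        env = self
        while env is not None:
            if name in env.vars:
                return env.vars[name]
            env = env.parent
        raise NameError(name)


def bind(env, formals, operands):
    """Bind a parameter tree; ["a", ".", "rest"] models the dotted list (a . rest)."""
    if formals is IGNORE:
        return
    if isinstance(formals, str):
        env.vars[formals] = operands
        return
    fixed = formals
    if "." in formals:
        dot = formals.index(".")
        fixed = formals[:dot]
        bind(env, formals[dot + 1], list(operands[dot:]))
    for name, value in zip(fixed, operands):
        bind(env, name, value)


class Builtin:
    """Primitive operative: a Python function of (operands, dynamic environment)."""

    def __init__(self, fn):
        self.fn = fn

    def combine(self, operands, env):
        return self.fn(operands, env)


class Operative:
    """Compound operative made by $vau: operands arrive unevaluated."""

    def __init__(self, formals, eformal, body, static_env):
        self.formals, self.eformal = formals, eformal
        self.body, self.static_env = body, static_env

    def combine(self, operands, env):
        local = Env(self.static_env)
        bind(local, self.formals, operands)
        bind(local, self.eformal, env)  # the caller's environment, unless #ignore
        result = None
        for form in self.body:
            result = evaluate(form, local)
        return result


class Applicative:
    """What wrap produces: evaluates the operands, then calls the wrapped combiner."""

    def __init__(self, combiner):
        self.combiner = combiner

    def combine(self, operands, env):
        args = [evaluate(operand, env) for operand in operands]
        return self.combiner.combine(args, env)


def evaluate(form, env):
    if isinstance(form, str):   # symbol
        return env.lookup(form)
    if isinstance(form, list):  # combination
        return evaluate(form[0], env).combine(form[1:], env)
    return form                 # everything else evaluates to itself


def applicative(fn):
    return Applicative(Builtin(lambda args, env: fn(*args)))


def define(operands, env):
    env.vars[operands[0]] = evaluate(operands[1], env)


ground = Env()
ground.vars.update({
    "$vau": Builtin(lambda ops, env: Operative(ops[0], ops[1], ops[2:], env)),
    "$define!": Builtin(define),
    "wrap": applicative(Applicative),
    "eval": applicative(evaluate),
    "list*": applicative(lambda *xs: list(xs[:-1]) + list(xs[-1])),
    "+": applicative(lambda a, b: a + b),
})

# The $lambda definition from this post, transcribed into the list encoding.
evaluate(["$define!", "$lambda",
          ["$vau", ["formals", ".", "body"], "env",
           ["wrap", ["eval", ["list*", "$vau", "formals", IGNORE, "body"],
                     "env"]]]],
         ground)

result = evaluate([["$lambda", ["x", "y"], ["+", "x", "y"]], 1, 2], ground)
curried = evaluate([[["$lambda", ["x"], ["$lambda", ["y"], ["+", "x", "y"]]], 10], 5],
                   ground)
```

Note that `$lambda` is never mentioned in the Python host code; it exists only because the transcribed definition was evaluated. The second call checks that the derived `$lambda` closes over its defining environment.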

Saturday, July 30, 2011

Some nice paperz on delimited continuations and first-class macros

Three Implementation Models for Scheme is the dissertation of Kent Dybvig (of Chez Scheme fame). It contains a very nice abstract machine for (learning about) implementing a Scheme with first-class continuations. I'm currently trying to grok and implement this model, and then extend it to delimited continuations.

A Monadic Framework for Delimited Continuations contains probably the most succinct description of delimited continuations (on page 3), along with a typically hair-raising example of their use.

Adding Delimited and Composable Control to a Production Programming Environment. One of the authors is Matthew Flatt, so you know what to expect - a tour de force. The paper is about how Racket implements delimited control and integrates it with all the other features of Racket (dynamic-wind, exceptions, ...). Apropos, compared to Racket, Common Lisp is a lightweight language.

Thursday, July 28, 2011

Everything you always wanted to know about JavaScript...

Thursday, July 21, 2011

On the absence of EVAL in ClojureScript

Thursday, July 14, 2011

Frankenprogramming

John Shutt would like to break the chains of semantics, e.g. using fexprs to reach under-the-hood to wrangle and mutate the vital organs of a previously meaningful subprogram. Such a technique is workable, but I believe it leads to monolithic, tightly coupled applications that are not harmonious with nature. I have just now found a portmanteau that properly conveys my opinion of the subject: frankenprogramming.

I'm looking forward to John's reply. In the meantime, I have to say that I like to view this in a more relaxed way. As Ehud Lamm said:

Strict abstraction boundaries are too limiting in practice. The good news is that one man's abstraction breaking is another's language feature.

Sunday, July 10, 2011

Atwood's Law

Any application that can be written in JavaScript, will eventually be written in JavaScript.

Friday, July 8, 2011

it is nice to have the power of all that useful but obsolete software out there

∂08-Jul-81 0232 Nowicki at PARC-MAXC Re: Switching tasks and context

Date: 6 Jul 1981 10:32 PDT

From: Nowicki at PARC-MAXC

In-reply-to: JWALKER's message of Wednesday, 1 July 1981 11:52-EDT

To: JWALKER at BBNA

cc: WorkS at AI

(via Chris, who's scouring Unix history for us)

Wednesday, June 29, 2011

PL nerds: learn cryptography instead

Monday, June 27, 2011

State of Code interview

Wednesday, June 22, 2011

John Shutt's blog

Thursday, June 16, 2011

David Barbour's soft realtime model

I'm also using vat semantics inspired from E for my Reactive Demand Programming model, with great success.

I'm using a temporal vat model, which has a lot of nice properties. In my model:

- Each vat has a logical time (getTime).

- Vats may schedule events for future times (atTime, atTPlus).

- Multiple events can be scheduled within an instant (eventually).

- Vats are loosely synchronized. Each vat sets a 'maximum drift' from the lead vat.

- No vat advances past a shared clock time (typically, wall-clock). This allows for soft real-time programming.

This model is designed for scalable, soft real-time programming. The constraints on vat progress give me an implicit real-time scheduler (albeit, without hard guarantees), while allowing a little drift between threads (e.g. 10 milliseconds) can achieve me an acceptable level of parallelism on a multi-core machine.

Further, timing between vats can be deterministic if we introduce explicit delays based on the maximum drift (i.e. send a message of the form 'doSomething `atTime` T' where T is the sum of getTime and getMaxDrift).
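To make the operations named above a bit more concrete, here is a rough single-vat sketch in Python. It is an assumption-laden toy, not David Barbour's implementation: the `Vat` class, the `run_until` driver, and the use of float seconds for logical time are all invented here, and synchronization between several vats is left out entirely. Only the method names mirror getTime, atTime, atTPlus, eventually and getMaxDrift.

```python
import heapq
import itertools


class Vat:
    """A toy vat: a logical clock plus a queue of timestamped events."""

    def __init__(self, max_drift=0.010):
        self._now = 0.0
        self._max_drift = max_drift
        self._queue = []
        self._seq = itertools.count()  # keeps same-instant events in FIFO order

    def get_time(self):
        return self._now

    def get_max_drift(self):
        return self._max_drift

    def at_time(self, t, action):
        if t < self._now:
            raise ValueError("cannot schedule an event in the logical past")
        heapq.heappush(self._queue, (t, next(self._seq), action))

    def at_t_plus(self, delay, action):
        self.at_time(self._now + delay, action)

    def eventually(self, action):
        # Runs later, but still within the current logical instant.
        self.at_time(self._now, action)

    def run_until(self, limit):
        """Process events without advancing past `limit` (e.g. the shared clock)."""
        while self._queue and self._queue[0][0] <= limit:
            t, _, action = heapq.heappop(self._queue)
            self._now = t
            action()
        self._now = max(self._now, limit)


trace = []
vat = Vat()
vat.at_t_plus(0.5, lambda: trace.append(("late", vat.get_time())))
vat.eventually(lambda: trace.append(("now", vat.get_time())))
# The explicit-delay idiom from the text: T = getTime + getMaxDrift.
vat.at_time(vat.get_time() + vat.get_max_drift(),
            lambda: trace.append(("drift", vat.get_time())))

vat.run_until(0.1)  # the shared clock has only reached 0.1, so "late" must wait
vat.run_until(1.0)
```

Events fire in logical-time order regardless of the order in which they were scheduled, and the vat never runs an event stamped later than the limit it was given.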

Previously: Why are objects so unintuitive

Sunday, June 12, 2011

Everything in JavaScript, JavaScript in Everything

Friday, June 10, 2011

No No No No

assert( top( o-------o

|L \

| L \

| o-------o

| ! !

! ! !

o | !

L | !

L| !

o-------o ) == ( o-------o

| !

! !

o-------o ) );

Multi-Dimensional Analog Literals

Tuesday, June 7, 2011

WADLER'S LAW OF LANGUAGE DESIGN

In any language design, the total time spent discussing a feature in this list is proportional to two raised to the power of its position.

0. Semantics

1. Syntax

2. Lexical syntax

3. Lexical syntax of comments

(That is, twice as much time is spent discussing syntax than semantics, twice as much time is spent discussing lexical syntax than syntax, and twice as much time is spent discussing syntax of comments than lexical syntax.)

(source, via Debasish Ghosh)

Saturday, May 28, 2011

Extreme software

Ouch

Few companies that installed computers to reduce the employment of clerks have realized their expectations.... They now need more, and more expensive clerks even though they call them "operators" or "programmers."— Peter F. Drucker

Wednesday, May 18, 2011

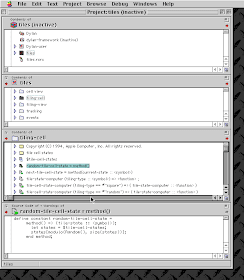

TermKit

The real modern question for programmers is what we can do, given that we actually have computers. Editors as flexible paper won't cut it.

The editor for FunO's Dylan product -- Deuce -- is the next generation of Zwei in many ways. It has first class polymorphic lines, first class BPs [buffer pointers], and introduces the idea of first class "source containers" and "source sections". A buffer is then dynamically composed of "section nodes". This extra generality costs in space (it takes about 2 bytes of storage for every byte in a source file, whereas gnuemacs and the LW editor take about 1 byte), and it costs a little in performance, but in return it's much easier to build some cool features:

- Multiple fonts and colors fall right out (it took me about 1 day to get this working, and most of the work for fonts was because FunO Dylan doesn't have built-in support for "rich characters", so I had to roll my own).

- Graphics display falls right out (e.g., the display of a buffer can show lines that separate sections, and there is a column of icons that show where breakpoints are set, where there are compiler warnings, etc. Doing both these things took less than 1 day, but a comparable feature in Zwei took a week. I wonder how long it took to do the icons in Lucid's C/C++ environment, whose name I can't recall.)

- "Composite buffers" (buffers built by generating functions such as "callers of 'foo'" or "subclasses of 'window'") fall right out of this design, and again, it took less than a day to do this. It took a very talented hacker more than a month to build a comparable (but non-extensible) version in Zwei for an in-house VC system, and it never really worked right.

Of course, the Deuce design was driven by knowing about the sorts of things that gnuemacs and Zwei didn't get right (*). It's so much easier to stand on other people's shoulders...

Saturday, May 14, 2011

Secret toplevel

(#' is EdgeLisp's code quotation operator.)

Wednesday, May 11, 2011

The why of macros

The entire point of programming is automation. The question that immediately comes to mind after you learn this fact is - why not program a computer to program itself? Macros are a simple mechanism for generating code, in other words, automating programming. [...]

This is also the reason why functional programming languages ignore macros. The people behind them are not interested in programming automation. [Milner] created ML to help automate proofs. The Haskell gang is primarily interested in advancing applied type theory. [...]

Adding macros to ML will have no impact on its usefulness for building theorem provers. You can't make APL or Matlab better languages for working with arrays by adding macros. But as soon as you need to express new domain concepts in a language that does not natively support them, macros become essential to maintaining good, concise code. This IMO is the largest missing piece in most projects based around domain-driven design.

Tuesday, May 10, 2011

Hygiene in EdgeLisp

(defmacro swap (x y)

#`(let ((tmp ,x))

(setq ,x ,y)

(setq ,y tmp)))

nil

(defvar x 1)

1

(defvar tmp 2)

2

(swap x tmp)

1

x

2

tmp

1

(swap tmp x)

1

x

1

tmp

2
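For contrast, here is what the transcript above would look like if the macro's tmp could collide with the user's tmp. The Python sketch below fakes macro expansion with string templates; the helper names are made up, and the fresh-name trick is just one common way to avoid capture, shown as an assumption since this post doesn't say how EdgeLisp achieves hygiene internally.

```python
import itertools

_fresh = itertools.count()


def expand_swap_naive(x, y):
    """Unhygienic expansion: the temporary is always literally named `tmp`."""
    return f"tmp = {x}\n{x} = {y}\n{y} = tmp\n"


def expand_swap_renamed(x, y):
    """Expansion that invents a fresh temporary name for every use of the macro."""
    t = f"_tmp_{next(_fresh)}"
    return f"{t} = {x}\n{x} = {y}\n{y} = {t}\n"


def run(expansion):
    """Execute an expansion against x = 1, tmp = 2 and report the final values."""
    env = {"x": 1, "tmp": 2}
    exec(expansion, {}, env)
    return env["x"], env["tmp"]


naive = run(expand_swap_naive("x", "tmp"))      # the user's tmp gets captured
renamed = run(expand_swap_renamed("x", "tmp"))  # behaves like (swap x tmp) above
```

With the naive expansion both variables end up holding 1, because the macro's temporary and the user's variable are the same name. With the renamed temporary the values are actually exchanged, matching the (swap x tmp) result in the transcript.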

Sunday, May 8, 2011

Compiling, linking, and loading in EdgeLisp

Let's compile a FASL from a piece of code:

(defvar fasl (compile #'(print "foo")))

#[fasl [{"execute":"((typeof _lisp_function_print !== \"undefined\" ? _lisp_function_print : lisp_undefined_identifier(\"print\", \"function\", undefined))(null, \"foo\"))"}]]

(Note that EdgeLisp uses #' and #` for code quotation.)

The FASL contains the compiled JavaScript code of the expression (print "foo").

We can load that FASL, which prints foo.

(load fasl)

foo

nil

Let's create a second FASL:

(defvar fasl-2 (compile #'(+ 1 2)))

#[fasl [{"execute":"((typeof _lisp_function_P !== \"undefined\" ? _lisp_function_P : lisp_undefined_identifier(\"+\", \"function\", undefined))(null, (lisp_number(\"+1\")), (lisp_number(\"+2\"))))"}]]

(load fasl-2)

3

Using link we can concatenate the FASLs, combining their effects:

(defvar linked-fasl (link fasl fasl-2))

(load linked-fasl)

foo

3

#[fasl [

execute:

(typeof _lisp_variable_nil !== "undefined" ? _lisp_variable_nil : lisp_undefined_identifier("nil", "variable", undefined))

compile:
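The compile / link / load workflow shown above can be mimicked with a toy model in Python. This is a sketch under assumptions, not EdgeLisp's implementation: the `Fasl` class and the helpers `compile_expr`, `link` and `load` are hypothetical names, and a FASL here holds only an execute-time list of Python expression strings, whereas the real FASLs shown above contain generated JavaScript.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fasl:
    """A toy fast-load unit: source strings to evaluate, in order, at load time."""
    execute: tuple


def compile_expr(source):
    """'Compile' one expression: check that it parses, then package it."""
    compile(source, "<fasl>", "eval")  # raises SyntaxError for malformed input
    return Fasl(execute=(source,))


def link(*fasls):
    """Concatenate FASLs so that loading the result combines their effects."""
    return Fasl(execute=tuple(src for fasl in fasls for src in fasl.execute))


def load(fasl, env=None):
    """Evaluate each unit in order and return the value of the last one."""
    env = {} if env is None else env
    result = None
    for src in fasl.execute:
        result = eval(src, env)
    return result


fasl = compile_expr('print("foo")')
fasl_2 = compile_expr("1 + 2")
linked_fasl = link(fasl, fasl_2)
value = load(linked_fasl)  # prints foo, then evaluates 1 + 2
```

The point of the model is only that linking is concatenation: nothing is re-compiled, and loading the linked unit has the same effects as loading its parts one after the other.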

Thursday, May 5, 2011

Land of Lisp - The Music Video!

Via Book Review: Land of LISP, by Conrad Barski via @zooko.

Wednesday, May 4, 2011

Unlimited number of runtimes

Friday, April 29, 2011

on quotation

Lisp literals (booleans, numbers, strings, ...) are sometimes called self-evaluating or self-quoting objects.

And that's really the use-mention distinction.

Thursday, April 28, 2011

A new Cambrian explosion

But I think all of these developments will be dwarfed by the emergence of the browser as the unified display substrate and JavaScript as the new instruction set.

The last time we had a similar event was the introduction of the GUI in the 80's/90's: millions of programmers scrambled to write every imaginable application for this new system.

But the GUI explosion will be peanuts compared to the browser+JS explosion! Not only are there far more programmers today, they're also all joined by the internet now. And there's ubiquitous view source. Need I say more??

Millions of programmers are scrambling right now to write every imaginable application for the browser+JS.

If you ain't emittin' HTML and JS, you're gonna miss out on this once-in-a-geological-era event!

R7RS discussion warming up

For example, '幺' (U+5e7a) has Numeric_Type = 'Numeric', since the character means small or young, so it can sometimes mean 1 in some specific context (for Japanese, probably the only place it means '1' is in some Mah-jong terms.) So, when I'm scanning a string and found that char-numeric? returns #t for a character, and that character happens to '幺' (U+5e7a), and then what I do? It is probably a part of other word so I should treat it as an alphabetic character. And even if I want to make use of it, I need a separate database to look up to know what number '幺' is representing. — Shiro Kawai of Gauche

Taylor Campbell's blag

For example, he clears up my confusion about UNWIND-PROTECT vs Continuations:

The difference [to DYNAMIC-WIND] is in the intent implied by the use of UNWIND-PROTECT that if control cannot re-enter the protected extent, the protector can be immediately applied when control exits the protected extent even if no garbage collection is about to run finalizers.

Note that one cannot in general reconcile

(1) needing to release the resource immediately after control exits the extent that uses it, and

(2) enjoying the benefits of powerful control abstractions such as inversion of control.

However, if (1) is relaxed to

(1') needing to release the resource immediately after control /returns normally from/ the extent that uses it,

then one can reconcile (1') and (2) by writing

(CALL-WITH-VALUES (LAMBDA () <resource-usage>)
  (LAMBDA RESULTS
    <resource-release>
    (APPLY VALUES RESULTS))),

or simply

(BEGIN0 <resource-usage> <resource-release>)

using the common name BEGIN0 for this idiom. (In Common Lisp, this is called PROG1.)

(And Dorai Sitaram says: UNWIND-PROTECT "cannot have a canonical, once-and-for-all specification in Scheme, making it important to allow for multiple library solutions".)
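As a rough illustration of the BEGIN0 idiom, here is a minimal Java sketch. The names Begin0Sketch, begin0 and demo are made up for this example, and Java has no re-entrant continuations, so the sketch can only show the "release on normal return" half of the story: the release step is skipped when the usage step exits by throwing, which is exactly where it differs from try/finally.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

class Begin0Sketch {
    // Hypothetical helper: run usage, then release, and return usage's result.
    // release runs only if usage returns normally (unlike try/finally).
    static <T> T begin0(Supplier<T> usage, Runnable release) {
        T result = usage.get();
        release.run();
        return result;
    }

    // Records what happens on a normal return and on a non-local exit.
    static List<String> demo() {
        List<String> log = new ArrayList<>();

        String r = begin0(() -> { log.add("use"); return "value"; },
                          () -> log.add("release"));
        log.add("result=" + r);

        try {
            begin0(() -> { log.add("use"); throw new IllegalStateException(); },
                   () -> log.add("release"));
        } catch (IllegalStateException e) {
            log.add("escaped"); // release was not run for this call
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Whether skipping the release on a throw is acceptable depends on the resource; the point of the quoted passage is only that this weaker guarantee is the one that composes with powerful control abstractions.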

Wednesday, April 27, 2011

What's a condition system and why do you want one?

Exception handling

try {
    throw new Exception();
} catch (Exception e) {
    ... // handler code
}

Everybody knows what this exception handling code in Java does: the THROW searches for a CATCH clause (a handler) that catches Exception or one of its subclasses, unwinds the stack, and calls the handler code with E bound to the exception object.

At the moment the exception is thrown, the stack looks like this:

- ... // outside stack

- TRY/CATCH(Exception e)

- ... // middle stack

- THROW new Exception()

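Before the handler is called, the stack is unwound; the middle stack and the THROW frame are discarded:

- ... // outside stack

- TRY/CATCH(Exception e)

- ... // middle stack (discarded)

- THROW new Exception() (discarded)

The handler then runs directly under the TRY/CATCH:

- ... // outside stack

- TRY/CATCH(Exception e)

- ... // handler code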

Condition systems in the Lisp family are based on the fundamental insight that calling a handler can be decoupled from unwinding the stack.

Imagine the following:

try {
    throw new Exception();
} handle (Exception e) {
    ... // handler code
}

We've added a new keyword to Java, HANDLE. HANDLE is just like CATCH, except that the stack is not unwound when an Exception is thrown.

With this new keyword, the stack looks like this when the handler is called:

- ... // outside stack

- TRY/HANDLE(Exception e)

- ... // middle stack

- THROW new Exception()

- ... // handler code

For many exceptions it makes sense to simply unwind the stack, like ordinary exception handling does. But for some exceptions, we gain a lot of power from the non-unwinding way condition systems enable.
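HANDLE is not real Java, but its behavior can be approximated with an explicit handler stack. The following is a minimal sketch under that assumption; HandleSketch, tryHandle, signal and middle are names invented for this example. It simplifies real condition systems in at least one way: a handler stays installed while it runs, whereas Common Lisp disables it for the duration of the call.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Consumer;

class HandleSketch {
    // Hypothetical handler stack; the innermost handler is on top.
    private static final Deque<Consumer<Exception>> HANDLERS = new ArrayDeque<>();
    private static final StringBuilder TRACE = new StringBuilder();

    // Plays the role of TRY/HANDLE: run body with handler installed.
    static void tryHandle(Runnable body, Consumer<Exception> handler) {
        HANDLERS.push(handler);
        try {
            body.run();
        } finally {
            HANDLERS.pop();
        }
    }

    // Plays the role of THROW under HANDLE: the innermost handler is invoked
    // as an ordinary call, so the signaling frames are still on the stack.
    static void signal(Exception e) {
        Consumer<Exception> handler = HANDLERS.peek();
        if (handler != null) handler.accept(e);
    }

    // Stands in for the "middle stack" between the handler and the signal.
    static void middle() {
        TRACE.append("middle-enter ");
        signal(new Exception("oops"));
        TRACE.append("middle-exit "); // reached, because nothing was unwound
    }

    static String demo() {
        TRACE.setLength(0);
        tryHandle(HandleSketch::middle, e -> TRACE.append("handler "));
        return TRACE.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "middle-enter handler middle-exit"
    }
}
```

The handler runs between "middle-enter" and "middle-exit", that is, on top of the frames that signaled, which is the stack picture shown above.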

Restarts

One of the most interesting aspects of not automatically unwinding the stack when an exception occurs is that we can restart the computation that raised the exception.

Let's imagine two primitive API functions: GETVAL and SETVAL read and write a VAL variable.

Object val = null;
Object getVal() { return val; }
void setVal(Object newVal) { val = newVal; }

Now we want to add the contract that GETVAL should never return null. When GETVAL is called and VAL is null, an exception is raised:

Object getVal() {
    if (val == null) throw new NoValException();
    else return val;
}

A user of GETVAL may install a handler like this:

try {
    getVal();
} handle (NoValException e) { // note use of HANDLE, not CATCH
    ... // handler code
}

When GETVAL() is called and VAL is null, an exception is thrown, our handler gets called, and the stack looks like this:

- ... // outside stack

- TRY/HANDLE(NoValException e)

- getVal()

- THROW new NoValException()

- ... // handler code

Thanks to the non-unwinding nature of condition systems, an application may decide to simply use a default value when GETVAL is called and VAL is null.

We do this using restarts, which are simply an idiomatic use of non-unwinding exceptions. (1)

We rewrite GETVAL to provide a restart for using a value:

Object getVal() {
    try {
        if (val == null) throw new NoValException();
        else return val;
    } catch (UseValRestart r) {
        return r.getVal();
    }
}

GETVAL can be restarted by throwing a USEVALRESTART whose value will be returned by GETVAL. (Note that we use CATCH and not HANDLE to install the handler for the restart.) In the application:

try {
    getVal();
} handle (NoValException e) {
    throw new UseValRestart("the default value");
}

(The USEVALRESTART is simply a condition/exception that can be constructed with a value as argument, and offers a single method GETVAL to read that value.)

Now, when GETVAL() is called and VAL is null, the stack looks like this:

- ... // outside stack

- TRY/HANDLE(NoValException e)

- getVal()

- TRY/CATCH(UseValRestart r) [1]

- THROW new NoValException()

- THROW new UseValRestart("the default value") [2]

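The USEVALRESTART thrown by the handler [2] is caught by the CATCH clause in GETVAL [1]. The stack is unwound up to that clause, and GETVAL returns the restart's value:

- ... // outside stack

- TRY/HANDLE(NoValException e)

- getVal()

- TRY/CATCH(UseValRestart r)

- THROW new NoValException() (discarded)

- THROW new UseValRestart("the default value") (discarded)

- return r.getVal(); // "the default value"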
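The restart protocol traced above can be approximated in plain Java by combining a handler stack (standing in for HANDLE) with ordinary exceptions (for the restart). This is only a sketch: RestartSketch, signal and getValWithDefault are hypothetical names, the exceptions are made unchecked for brevity, and an unhandled condition simply falls back to ordinary unwinding.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Consumer;

class RestartSketch {
    static class NoValException extends RuntimeException {}

    // The restart: an exception that carries the value GETVAL should return.
    static class UseValRestart extends RuntimeException {
        private final Object val;
        UseValRestart(Object val) { this.val = val; }
        Object getVal() { return val; }
    }

    // Hypothetical stand-in for HANDLE: a stack of non-unwinding handlers.
    private static final Deque<Consumer<RuntimeException>> HANDLERS = new ArrayDeque<>();
    static Object val = null;

    // Call the innermost handler without unwinding; it may throw a restart.
    static void signal(RuntimeException e) {
        Consumer<RuntimeException> handler = HANDLERS.peek();
        if (handler != null) handler.accept(e);
        throw e; // no handler, or the handler declined: unwind as usual
    }

    static Object getVal() {
        try {
            if (val == null) signal(new NoValException());
            return val;
        } catch (UseValRestart r) { // the restart offered by getVal
            return r.getVal();
        }
    }

    // Application code: handle NoValException by invoking the restart.
    static Object getValWithDefault(Object dflt) {
        HANDLERS.push(e -> {
            if (e instanceof NoValException) throw new UseValRestart(dflt);
        });
        try {
            return getVal();
        } finally {
            HANDLERS.pop();
        }
    }

    public static void main(String[] args) {
        System.out.println(getValWithDefault("the default value"));
        val = "a real value";
        System.out.println(getValWithDefault("the default value"));
    }
}
```

The handler runs while getVal's TRY/CATCH frame is still live, which is why the UseValRestart it throws can be caught inside getVal rather than by the application.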

A simple extension would be to offer a restart for letting the user interactively choose a value:

Object getVal() {
    try {
        if (val == null) throw new NoValException();
        else return val;
    } catch (UseValRestart r) {
        return r.getVal();
    } catch (LetUserChooseValRestart r) {
        return showValDialog();
    }
}

This assumes a function SHOWVALDIALOG that interactively asks the user for a value, and returns that value. The application can now decide to use a default value or let the user choose a value.

Summary

The ability to restart a computation is gained by decoupling calling a handler from unwinding the stack.

We have introduced a new keyword HANDLE, that's like CATCH, but doesn't unwind the stack (HANDLE and CATCH are analogous, but not equal, to Common Lisp's handler-bind and handler-case, respectively).

HANDLE is used to act from inside the THROW statement. We have used this to implement restarts, a stylized way to use exceptions.

I hope this post makes clear the difference between ordinary exception handling, and Lisp condition systems, all of which feature restarts in one form or another.

Further reading:

- A tale of restarts - comp.lang.dylan message by Chris Double ("This is a "I'm glad I used Dylan" story...")

- Exceptional Situations In Lisp and Condition Handling in the Lisp Language Family - increasingly detailed papers by Kent Pitman

Footnotes:

(1) This is inspired by Dylan. Common Lisp actually treats conditions and restarts separately.