For the discussion of interactive programming languages, I'm now hanging out on #interactiveprogramming on chat.freenode.net IRC.

You're all happily invited to join!

Showing posts with label hypercode. Show all posts

Thursday, May 17, 2012

Tuesday, May 15, 2012

Back to the future

Roly Perera, who's doing awesome work on interactive programming, is apparently taking up blogging again. Yay!

Back to the future

I know not of these “end users” of whom you speak. There is only programming. – Unknown programmer of the future

Jonathan Edwards recently wrote that an IDE is not enough. To break out of our current commitment to text files and text editors requires a new programming paradigm, not just a fancy IDE. I feel the same. I’ve spent the last two and a half years moving my interactive programming work forward, and I feel I’m now ready to start sharing.

Bret Victor blew us away with his influential, Jonathan-Edwards-inspired, mindmelt of a demo but was conspicuously quiet on how to make that kind of stuff work. (Running and re-running fragments of imperative code that spit out effects against a shared state, for example, does not a paradigm make, even if it can be made to work most of the time. “Most of time” is not good enough.) I won’t be blowing anyone’s minds with fancy demos, unfortunately, but over the next few months I’ll be saying something about how we can make some of this stuff work. I’ve been testing out the ideas with a proof-of-concept system called LambdaCalc, implemented in Haskell.

Friday, April 27, 2012

Physical code

Roly Perera, who is doing awesome work on continuously executing programs and interactive programming, tweeted that we should take inspiration from nature for the next generation of PLs:

- Lesson #1: it's physical objects all the way down. #

- Lesson #2: there is only one "from-scratch run", and we're living in it. #

- Lesson #3: there are no black boxes. #

The first point is one I'm thinking about in the context of what I call hypercode.

Common Lisp is - as usual - an interesting example: with its Sharpsign Dot macro character, one can splice real objects into the source code loaded from a file.

E.g. if you put the text (* #.(+ 1 2) 3) into a file, the actual code when the file is loaded is (* 3 3).

And with Sharpsign Equal-Sign and Sharpsign Sharpsign, we can construct cyclic structures in files: '#1=(foo . #1#) reads as a pair that contains the symbol foo as car, and itself as cdr: #1=(FOO . #1#) (don't forget to set *print-circle* to true if you don't want to get a stack overflow in the printer).

The question I'm thinking about is: what happens if source code consists of the syntax objects themselves, and not their representations in some format? Is it just more of the same, or a fundamental change?

For example: what if you have a language with first-class patterns and you can now manipulate these patterns directly - i.e. they present their own user interface? Is this a fundamental change compared to text or not?

As Harrison Ainsworth tweeted:

- Is parsing not an illusory problem caused by using the wrong data structure? #

Wednesday, April 18, 2012

The Supposed Primacy of Text

As should be obvious to my regular readers, I'm using the Axis of Eval to post raw thoughts. I don't do the work of rigorously thinking these thoughts through. I'm trying to narrate my mental work, in whatever state it is at the moment, in the hope of engaging in dialogue with those of you with similar interests.

With this disclaimer out of the way, another take on the text/graphical axis.

In a comment on The Escape from the Tyranny of the Typewriter, John writes:

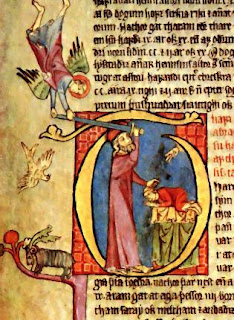

Okay, I'll play devil's advocate here. Text is the most articulate form of expression we have; it's so potent that even your graphical representation actually resorts to it. If you're going to introduce something else as well, that reduces simplicity, so there should be a very clear reason for it, and what you introduce should be in some sense manifestly The Right Thing.

But the plain text we use with computers is hugely impoverished, compared to pre-computer texts. Compare to Dadaists' use of text (the art at the beginning of the text) or religious texts, which were widely "scribbled in the margins", and adorned with many graphical features.

Or take mathematical text: it has rich, mostly informal structure for conveying information:

This brings us to computers' favorite: a sequence of code-points. That is simply in no way adequate to represent the exploits of the past, not to speak of the future.

Modern mathematicians are shoehorned into writing their formulas in LaTeX and having them presented to them in ASCII art in their mathematical REPLs.

So let's turn the tables: Plain text is a particular special case of using a general purpose graphical display to display sequences of code points. Furthermore, plain text stored in a file is also just a special case of arbitrary information stored in a file.

Sunday, April 15, 2012

Escape from the Tyranny of the Typewriter

One misconception of computing is that plain text is somehow the natural thing for a computer to process. False. It only appears that way, because even the lowest layers of our computing infrastructure are already toolstrapped to work with the bit patterns representing plain text.

Thinking about graphical syntax, combined with the brain stimulating powers of Kernel, it occurred to me that in a fundamental sense,

(+ 1 2) is really just a shorthand for (eval (list + 1 2) (get-current-environment))

(Note that I'm assuming the existence of a Sufficiently Smart Partial Evaluator in all my investigations. As history shows, after the 5 decades it takes for Lisp ideas to become mainstream, it's perfectly possible to implement them efficiently.)

This harks back to my previous post about using and mentioning syntax. The (+ 1 2) is the way we directly use the syntax, whereas (eval (list + 1 2) (get-current-environment)) is the way we use mentioned syntax.

But why have two? Obviously, in a typewriter-based syntax, writing (eval (list + 1 2) (get-current-environment)) all the time would be much too cumbersome. But in a graphical syntax, the difference between the two might be just a different border color for the corresponding widgets.

This means: maybe we don't even need a way to use syntax, and can get by with just a way of mentioning it.

Put differently: In typewriter-based syntax, we cannot afford to work with mentioned syntax all the time, because we would need to wrap it in (eval ... (get-current-environment)) to actually use it. A graphical syntax would make it possible to work solely with mentioned syntax, and indicate use in a far more lightweight way (e.g. a different color).
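To make the use/mention split concrete, here is a rough Python analogue. It is only a sketch under my own assumptions: the `evaluate` function, the dictionary environment, and the convention that strings play the role of symbols are all made up for illustration and are not Kernel's actual evaluator. The mentioned form is ordinary data (a list that already contains the operator object, like `(list + 1 2)`), and "using" it is nothing more than passing that data to an explicit eval.

```python
import operator


def evaluate(form, env):
    """Evaluate a mentioned form in env (hypothetical mini-evaluator)."""
    if isinstance(form, str):
        # Strings stand in for symbols: look them up in the environment.
        return env[form]
    if isinstance(form, list):
        # A list is a combination: evaluate every element, then call the first.
        op, *args = [evaluate(f, env) for f in form]
        return op(*args)
    # Everything else (numbers, operator objects) evaluates to itself.
    return form


env = {"+": operator.add}

# Mentioning: build the form as data, with the operator object spliced in,
# which mirrors (list + 1 2).
mentioned = [env["+"], 1, 2]

# Using: an explicit eval of the mentioned form in the current environment,
# which mirrors (eval (list + 1 2) (get-current-environment)).
used = evaluate(mentioned, env)
print(used)
```

In a textual syntax the second step has to be spelled out every time; the idea in the text is that a graphical editor could mark "evaluate this" with something as cheap as a border color.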

But maybe that's bull.

What is Lisp syntax, really, and the use-mention distinction

In a recent comment, John Shutt wrote:

I do agree that the success of Lisp depends quite heavily on the fact that it has no syntax dedicated to semantics. But this means that, to the extent that a language introduces syntax dedicated to semantics, to just that extent the language becomes less Lisp.

After a couple of days of thinking about this on and off, in the context of hypercode/graphical syntax, I came to these thoughts:

I think the cornerstone of Lisp, with respect to syntax, is that the barrier between using a piece of syntax and mentioning it is very low.

When I write (+ 1 2), I use the syntax to compute 3. But when I write '(+ 1 2) I just mention it. Note that the difference between use and mention is just one small sign, the quote '.

Let's take a piece of syntax -- corresponding to (let ((x 1) (y 2)) (+ x y)) -- in a hypothetical graphical syntax:

| let | [bindings widget: x = 1, y = 2] | + x y |

The green border around the variable bindings and their gray background are meant to indicate that the set of bindings is not just the usual Lisp list-based syntax, but rather that we're using a special widget here, one that affords convenient manipulation of name-value mappings.

This point is important. The graphical syntax is not equivalent to (let ((x 1) (y 2)) (+ x y)) -- it doesn't use lists to represent the LET's bindings, but rather a custom widget. The LET itself and the call to + do use "lists", or rather, their graphical analogue, the gray bordered boxes.

So it seems that we have introduced syntax dedicated to semantics, making this language less Lisp, if John's statement is true.

But I believe this is the wrong way to look at it. Shouldn't we rather ask: does this new syntax have the same low barrier between using and mentioning it, as does the existing Lisp syntax?

Which leads to the question: how can we add new (possibly graphical) syntax to Lisp, while keeping the barrier between use and mention low?

In conclusion: my hunch is that we can add syntax to Lisp, without making it less Lisp, as long as the new syntax can be mentioned as naturally as it can be used.

Follow-up: Escape from the Tyranny of the Typewriter.

HN discussion

Thursday, April 5, 2012

Fear and Loathing at the Lisp Syntax-Semantics Interface

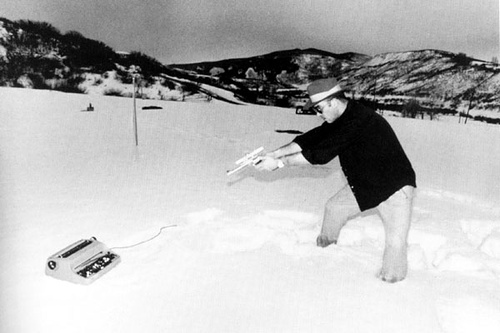

You poor fool! Wait till you see those goddamn parentheses.

Recent investigations of xonses and first-class patterns have driven home an important point:

Part of Lisp's success stems from its heavy use of puns.

Take (LET ((X 1) (Y 2)) (+ X Y)) for example. The pun here is that the same syntactic device - parentheses - is used to express two wildly different things: calls to the LET and + combiners on the one hand, and a list of bindings on the other.

Let's step back from Lisp's typewriter-based syntax for a moment, and imagine a graphical 2D syntax and a convenient 2Dmacs hypercode editor. Since we're now fully graphic, fully visual, it makes little sense to construct lists. We construct real widgets. So we would have one widget for "list of bindings" and all kinds of snazzy displays and keyboard shortcuts for working with that particular kind of form.

We don't have that editor yet, but still it makes sense to think about first-class forms. Right now, we restrict ourselves to lists and atoms. But why shouldn't the list of bindings of a LET be a special form, with a special API? E.g. we could write (LET [bindings [binding X 1] [binding Y 2]] (+ X Y)). Of course this is horrible. But look back at the imaginary 2D editor again. There we wouldn't have to write the [bindings [binding ... stuff. We would be manipulating a first-class form, a special GUI widget for manipulating a list of bindings...
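As a thought experiment, a first-class "list of bindings" form might look roughly like the following Python sketch. Everything here is hypothetical: the `Binding`, `Bindings`, and `Let` classes and their methods are names I made up for illustration, not part of any existing Lisp or editor. The point is only that the bindings become an object with its own API instead of a list that happens to be read as bindings.

```python
from dataclasses import dataclass, field


@dataclass
class Binding:
    name: str
    value: object


@dataclass
class Bindings:
    """A first-class 'list of bindings' form with a small API of its own."""
    items: list = field(default_factory=list)

    def names(self):
        return [b.name for b in self.items]

    def lookup(self, name):
        for b in self.items:
            if b.name == name:
                return b.value
        raise KeyError(name)

    def to_list_syntax(self):
        # Fall back to the classic punned representation: ((X 1) (Y 2)).
        return [[b.name, b.value] for b in self.items]


@dataclass
class Let:
    bindings: Bindings
    body: list


# Roughly (LET [bindings [binding X 1] [binding Y 2]] (+ X Y)).
form = Let(Bindings([Binding("X", 1), Binding("Y", 2)]), ["+", "X", "Y"])
names = form.bindings.names()
classic = ["LET", form.bindings.to_list_syntax(), form.body]
print(names, classic)
```

A 2D editor could then attach its widget to `Bindings` directly, while `to_list_syntax` keeps a path back to the parenthesized form.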

Sunday, April 1, 2012

First-class patterns

A good sign: xonses are already making me think previously impossible thoughts.

In my Kernel implementation Virtua, every object can potentially act as a left-hand side pattern.

A pattern responds to the message match(lhs, rhs, env) -- where the LHS is the pattern -- performs some computation, and possibly updates env with new bindings. The rules for Kernel's built-ins are:

- #ignore matches anything, and doesn't update the environment.

- Symbols match anything, and update the environment with a new binding from the symbol to the RHS.

- Nil matches only nil, and doesn't update the environment.

- Pairs match only pairs, and recursively match their car and cdr against the RHS's car and cdr, respectively.

- All other objects signal an error when used as a pattern.

With these simple rules one can write e.g. a lambda that destructures a list:

(apply (lambda (a b (c d)) (list a b c d))
       (list 1 2 (list 3 4)))
==> (1 2 3 4)
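The built-in rules above can be sketched in a few lines of Python. This is an illustration, not Virtua's code: the `Symbol` and `Pair` classes, the `IGNORE` and `NIL` stand-ins, the `lisp_list` helper, and the choice to signal a failed match with an exception are all my assumptions (the rules only say what matches, not what happens otherwise).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Symbol:
    name: str


@dataclass(frozen=True)
class Pair:
    car: object
    cdr: object


IGNORE = object()  # stands in for Kernel's #ignore
NIL = None         # stands in for the empty list


def match(lhs, rhs, env):
    """Match pattern lhs against value rhs, adding bindings to env."""
    if lhs is IGNORE:
        return                      # matches anything, binds nothing
    if isinstance(lhs, Symbol):
        env[lhs.name] = rhs         # matches anything, binds the symbol
        return
    if lhs is NIL:
        if rhs is not NIL:
            raise ValueError("expected nil")
        return
    if isinstance(lhs, Pair):
        if not isinstance(rhs, Pair):
            raise ValueError("expected a pair")
        match(lhs.car, rhs.car, env)
        match(lhs.cdr, rhs.cdr, env)
        return
    raise TypeError(f"not a pattern: {lhs!r}")


def lisp_list(*items):
    """Build a nil-terminated chain of pairs from the given items."""
    result = NIL
    for item in reversed(items):
        result = Pair(item, result)
    return result


a, b, c, d = (Symbol(n) for n in "abcd")
params = lisp_list(a, b, lisp_list(c, d))   # the parameter tree (a b (c d))
args = lisp_list(1, 2, lisp_list(3, 4))     # the argument list (1 2 (3 4))
env = {}
match(params, args, env)
print(env)
```

After the call, `env` holds the four bindings that the body `(list a b c d)` then reads.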

Now, why stop at these built-ins? Why not add first-class patterns? We only need some way to plug them into the parsing process...

An example: In Common Lisp, we use parameter specializers in method definitions:

(defmethod frobnicate ((a Foo) (b Bar)) ...)

(a Foo) means: match only instances of Foo, and bind them to the variable a.

Generalizing this, we could write:

(defmethod frobnicate ([<: a Foo] [<: b Bar]) ...)

The square brackets indicate first-class patterns, and in Lisp fashion, every first-class pattern has an operator, <: in this case. The parser simply calls <: with the operands list (a Foo), and this yields a first-class pattern, that performs a type check against Foo, and binds the variable a.

Another example would be an as pattern, like ML's alias patterns or Common Lisp's &whole, that lets us get a handle on a whole term, while also matching its subterms:

(lambda [as foo (a b c)] ...)

binds foo to the whole list of arguments, while binding a, b, and c to the first, second, and third element, respectively.

Another example would be destructuring of objects:

(lambda ([dest Person .name n .age a]) ...)

would be a function taking a single argument that must be an instance of Person, and binds n and a to its name and age, respectively.

With first-class patterns, all of these features can be added in luserland.
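Here is how the three examples might look if patterns were ordinary objects that know how to match themselves. This is a Python sketch under my own assumptions, not Virtua's API: the class names `Subtype`, `As`, and `Dest`, the use of strings for symbols and Python lists for Lisp lists, and signaling a failed match with an exception are all invented for illustration.

```python
from dataclasses import dataclass


def match(pattern, value, env):
    """Dispatch: first-class patterns match themselves; the rest is built in."""
    if hasattr(pattern, "match"):
        pattern.match(value, env)            # first-class pattern object
    elif isinstance(pattern, str):
        env[pattern] = value                 # a symbol binds anything
    elif isinstance(pattern, list):
        if not isinstance(value, list) or len(value) != len(pattern):
            raise ValueError("list shape mismatch")
        for p, v in zip(pattern, value):
            match(p, v, env)
    else:
        raise TypeError(f"not a pattern: {pattern!r}")


@dataclass
class Subtype:
    """Analogue of [<: a Foo]: type check, then bind."""
    name: str
    cls: type

    def match(self, value, env):
        if not isinstance(value, self.cls):
            raise TypeError(f"expected {self.cls.__name__}")
        env[self.name] = value


@dataclass
class As:
    """Analogue of [as foo (a b c)]: bind the whole, then match the parts."""
    name: str
    inner: object

    def match(self, value, env):
        env[self.name] = value
        match(self.inner, value, env)


@dataclass
class Dest:
    """Analogue of [dest Person .name n .age a]: destructure an object."""
    cls: type
    fields: dict  # attribute name -> sub-pattern

    def match(self, value, env):
        if not isinstance(value, self.cls):
            raise TypeError(f"expected {self.cls.__name__}")
        for attr, sub in self.fields.items():
            match(sub, getattr(value, attr), env)


@dataclass
class Person:
    name: str
    age: int


env = {}
match([Dest(Person, {"name": "n", "age": "a"})], [Person("Ada", 36)], env)
print(env)
```

None of the three pattern classes needed a change to `match` itself, which is the sense in which such features could live in luserland.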
