One misconception about computing is that plain text is somehow the natural thing for a computer to process. False. It only appears that way, because even the lowest layers of our computing infrastructure are already toolstrapped to work with the bit patterns representing plain text.

Thinking about graphical syntax, combined with the brain-stimulating powers of Kernel, it occurred to me that in a fundamental sense,

(+ 1 2) is really just a shorthand for (eval (list + 1 2) (get-current-environment)). (Note that I'm assuming the existence of a Sufficiently Smart Partial Evaluator in all my investigations. As history shows, after the five decades it takes for Lisp ideas to become mainstream, it's perfectly possible to implement them efficiently.)

This harks back to my previous post about using and mentioning syntax. The (+ 1 2) is the way we directly use the syntax, whereas (eval (list + 1 2) (get-current-environment)) is the way we use mentioned syntax.
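To make the equivalence concrete, here is a minimal sketch in Python rather than Kernel. Everything in it is an assumption made for illustration: the `evaluate` function, the dictionary used as an environment, and the choice to model symbols as strings. It is not how Kernel is implemented. It only shows that using syntax directly and evaluating mentioned syntax arrive at the same value.

```python
import operator

def evaluate(expr, env):
    """Evaluate a mentioned expression (plain data) in the environment env."""
    if isinstance(expr, str):
        # A symbol (modeled here as a string): look it up.
        return env[expr]
    if isinstance(expr, list):
        # A combination: evaluate the head and the arguments, then apply.
        head = evaluate(expr[0], env)
        args = [evaluate(arg, env) for arg in expr[1:]]
        return head(*args)
    # Numbers and procedures evaluate to themselves.
    return expr

# Hypothetical stand-in for (get-current-environment).
env = {"+": operator.add}

# Mentioned syntax as it would appear in source text: the operator is a symbol.
mentioned = ["+", 1, 2]

# What (list + 1 2) builds: the operator has already been looked up,
# so the list holds the procedure itself.
built = [env["+"], 1, 2]

# Direct use, the analogue of writing (+ 1 2).
used_directly = operator.add(1, 2)
```

Both `evaluate(mentioned, env)` and `evaluate(built, env)` produce the same value as `used_directly`; the only difference is how much ceremony surrounds the expression.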

But why have two? Obviously, in a typewriter-based syntax, writing (eval (list + 1 2) (get-current-environment)) all the time would be much too cumbersome. But in a graphical syntax, the difference between the two might be just a different border color for the corresponding widgets.

This means: maybe we don't even need a way to use syntax, and can get by with just a way of mentioning it.

Put differently: In typewriter-based syntax, we cannot afford to work with mentioned syntax all the time, because we would need to wrap it in (eval ... (get-current-environment)) to actually use it. A graphical syntax would make it possible to work solely with mentioned syntax, and indicate use in a far more lightweight way (e.g. a different color).
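As a rough sketch of what "indicate use with a different color" could mean, the following Python models a widget as mentioned syntax plus a single flag. The `Widget` class, the `used` flag (standing in for the border color), and the `show` function are all hypothetical names invented for this illustration, and only flat expressions are handled.

```python
import operator
from dataclasses import dataclass

@dataclass
class Widget:
    """A hypothetical editor widget holding mentioned syntax."""
    expr: list           # e.g. ["+", 1, 2], the syntax as plain data
    used: bool = False   # stands in for the border color: True means "use"

def show(widget, env):
    """Return what the editor would display for this widget."""
    if not widget.used:
        # Mentioned: display the syntax itself.
        return widget.expr
    # Used: display the value the syntax evaluates to.
    op, *args = widget.expr
    return env[op](*args)

env = {"+": operator.add}
mentioned = Widget(["+", 1, 2])
used = Widget(["+", 1, 2], used=True)
```

The two widgets hold identical data; flipping one flag is the whole difference between mentioning the expression and using it, with no wrapper like (eval ... (get-current-environment)) required.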

But maybe that's bull.

You might want to consider looking at a few environments which eschew these conventions at some level:

Colorforth doesn't use ASCII, and compiles as you type.

Self has had a fully active live object editor since the early 90s, and most Self programmers rarely use text files. Morphic is probably the first true graphical programming environment. Hell, bezels were invented by its creator!

Smalltalk can use text files, but they "burned the disk packs" years ago. Etoys and Scratch get pretty far from the typewriter. The fact that you have a keyboard is about the only thing that's the same.

Sadly, nothing I've listed above is new. It ranges from the late 60s (Forth) to the early 90s (Self), and most people still code as if it were the days of Algol!

Okay, I'll play devil's advocate here. Text is the most articulate form of expression we have; it's so potent that even your graphical representation actually resorts to it. If you're going to introduce something else as well, that reduces simplicity, so there should be a very clear reason for it, and what you introduce should be in some sense manifestly The Right Thing.

The one thing that text and lists have in common, that visual forms might *perhaps* avoid, is sequentialism. That gets into very deep waters indeed; I don't claim sequential is "better than" parallel, but I do think there's *something* profoundly valuable about the sequential side of things that is being overlooked in the current fad for parallelism. At some point perhaps I'll even figure out just what that something is.

We could have multidimensional widget-grammars, complete with color information. We would still want a hundred problem-specific languages - describing maps, dialog, sounds, video editing, character animation, etc.

Structured programming seems to me orthogonal to syntactic abstraction.

This is the same thing I was saying when I said that parentheses in Lisp were just editor commands to navigate the syntactic tree, in http://groups.google.com/group/comp.lang.lisp/msg/3050088218d355e5

Now the question is which is more ergonomic: a gesture-based (or any other) user interface, where you need to close the feedback loop after each interaction, or a keyboard-based user interface, where you can input text on the run and check whether it's correct in a parallel process, with delayed correction?

I think the latter is more ergonomic, and I think that's the reason why structure editors haven't taken over.

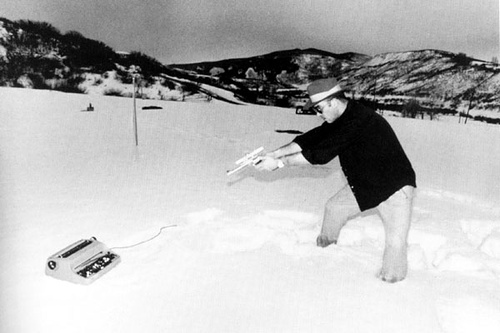

Now of course, if the goal is to do something WITHOUT a keyboard, using touch gestures, speech, or visual/3D gestures (using the camera or another 3D input device), it will be easier to use those inputs with a structured editor than to try to enter text. (But that may still be an option; have a look at http://en.wikipedia.org/wiki/Projection_keyboard. One could write a camera-keyboard even without the projection, if the user's imagination is strong enough.)

That said, a picture is worth a thousand words, and gestures can be convenient: instead of entering coordinates or sizes, you could draw them with a mouse or your finger. The future belongs to multi-modal user interfaces.