Sunday, August 28, 2011

Thursday, August 25, 2011

SRII 2011 - Keynote Talk by Alan Kay

Another awesome talk by Alan "I invented the term" Kay, after the recent Programming and Scaling.

"The internet is practically the only real object-oriented system on the planet." (~30:00)

SRII 2011 - Keynote Talk by Alan Kay - President, Viewpoints Research Institute from SRii GLOBAL CONFERENCE 2011 on Vimeo.

Also, the notion of reinventing the flat tire can't get enough airtime.

Tuesday, August 23, 2011

Totally awesome: FOSS Patents: Samsung cites "2001" movie as prior art against iPad patent

Attached hereto as Exhibit D is a true and correct copy of a still image taken from Stanley Kubrick's 1968 film "2001: A Space Odyssey." In a clip from that film lasting about one minute, two astronauts are eating and at the same time using personal tablet computers. The clip can be downloaded online at http://www.youtube.com/watch?v=JQ8pQVDyaLo. As with the design claimed by the D’889 Patent, the tablet disclosed in the clip has an overall rectangular shape with a dominant display screen, narrow borders, a predominately flat front surface, a flat back surface (which is evident because the tablets are lying flat on the table's surface), and a thin form factor.

(via @chris_stevenson:@stilkov)

Sunday, August 21, 2011

Software is a branch of movies

A post by HXA about the ideology of software engineering prompted me to re-read an old Ted Nelson essay. Some choice quotes:

"Virtual" does not mean, as many think, three-dimensional. It is the opposite of *real*.

Virtuality therefore means the *apparent structure of things*

Engineers generally deal with constructing reality. Movie-makers and software designers deal with constructing virtuality.

Software is a branch of movies.

Movies enact events on a screen that affect the heart and mind of a viewer. Software enacts events on a screen which affect the heart and mind of a user, AND INTERACT.

Saturday, August 20, 2011

Praising Kernel

I love Kernel! Let's face it: While Paul Graham has reanimated Lisp, Lisp has become stuck in a rut. CL hasn't changed since '94, and it probably won't, and heck, that's even a good thing - CL is the mud ball of strength, the acting patriarch of the Lisp family. There's a better chance of CL being around in 50 years than most other programming languages. The same for Scheme, although it's in a bit of an identity crisis right now. But what's crazy about Scheme is that it has become focused on ahead-of-time compilation, in a world where people run Linux in JavaScript JIT compilers. One of the purposes of Scheme should be to push compiler writers to be smarter. Let's forget all that static, performance-oriented stuff, let's make it as dynamic and general and simple as it can get, and spend the following decades on amazing new tech to make it fast! That's what Scheme's about, right? And let's not talk about Clojure, they don't even "see the need" for EVAL [in ClojureScript]. So much for the state of Lisp. Can you see it? We need to put the fun, nasty fun, back into Lisp. Lisp has been resting on its well-deserved laurels for much too long. Heck, even Java is getting lambdas now, and there's talk of macros in JavaScript. Lisp's purpose in the programming language galaxy is to assist our most gifted fellow humans in thinking previously impossible thoughts, remember? Do you really think current Lisps are up to that task?

So where does Kernel fit in? In short, I think Kernel is as close as you can get to the essence, the rhyming scheme of Lisp. Whereas the R6RS doesn't even mention interactive development, Kernel is squarely interactive. And it provides crystal clear semantics to go with it. LETREC blackhole? Hopeless toplevel? Not in Kernel. First-class environments solve these issues. Look, Kernel has a single form, $vau, that is as powerful as lambda, define-syntax, and let-syntax combined! (Strike that, it's even more powerful - you can use Kernel's macros, fexprs, just like ordinary functions.) Need I say more? Kernel can truly bootstrap itself from its three (or fewer) built-in forms (counting them is not easy), without the need for a separate expansion process, and without the need for a hygiene system - Kernel is simply inherently hygienic (when used right). Another form, $define!, not only takes on the roles of define and set!, but also doubles, nay, triples, nay, n-tuples, as a primitive for arbitrary namespace manipulation - you can build any module system you like on top of this single primitive! Think about it. Can your language do that? Fat chance! But should your Lisp be able to do that? I'd say. Now, you say, but no one has written real applications in Kernel yet. I say: that's what's so cool about it! It's a brave new world, without trodden paths, full of new discoveries in semantics, implementation technologies, and other things we can't even see yet. In the past 50 years, Lisp has succeeded in bringing object-orientation, dynamism, functional programming, and other niceties to the philistines. Soon, they'll even have macros and EVAL. Now it's time for Lisp to move another 50 years forward. That's what Lisp is for! And as far as I can see, Kernel is the vehicle for that.
So fire up your printers, print out John Shutt's amazing works Fexprs as the basis of Lisp function application; or, $vau: the ultimate abstraction and the Revised-1 Report on the Kernel Programming Language, bind them, study them, and start hacking on Lisp's future! It's easy (nah, OK, it's hard) and it starts with you!

Friday, August 19, 2011

Virtua subjotting

I'm keeping a quite detailed log of Virtua on the new Subjot service: http://subjot.com/manuel/virtua.

(Subjot is an interesting new microblogging service, where every user has multiple streams, and you can choose which to follow. Here's an invite. I have no affiliation with Subjot.)

The Virtua programming language

Virtua will be my next programming language, with the following core features:

- combined object lambda architecture

- delimited continuations

- fexprs

- plus, of course, all the good stuff we're used to from Lisp: sane syntax, numerical tower, ...

Virtua will be written in JavaScript. The goal is to make Virtua a good command language for interactive, extensible, browser-based applications, like an Emacs for hypermedia.

If you're interested in working with me on Virtua, do get in touch!

Thursday, August 18, 2011

Notes on delimited continuations

Delimited continuations are one of those topics where you need to spend months or maybe even years in the cloud of unknowing, until it suddenly clicks. At least, that's this autodidact's experience.

Delimited continuations are awesome, because they let us abstract over control flow. As I like to put it, one man's control flow is another man's data; or, as Chung-chieh Shan puts it, delimited continuations liberate control flow.

Continuations as we know them (i.e. those created by call/cc) are undelimited: they represent the whole rest of the computation. Delimited continuations represent the rest of a sub-computation up to a given prompt. They come from investigating REPLs: when you capture an undelimited continuation, the continuation includes the REPL's control flow! Generally, you don't want that, so with delimited continuations, the REPL can install a prompt before executing code entered by the user, and then you can capture a delimited continuation just up to that prompt, excluding the REPL's code.

My favorite description of delimited continuations is offered right at the start of A Monadic Framework for Delimited Continuations, even though the rest of the paper is quite over my head. Their API for delimited continuations is:

- newPrompt --- creates a fresh prompt, distinct from all other prompts. It's just an object that we use as an identifier, basically. Some systems simply use symbols for prompts.

- pushPrompt p e --- pushes prompt p on the stack, and executes expression e in this new context. This delimits the stack, so we can later capture a delimited continuation up to this part of the stack.

- withSubCont p f --- aborts (unwinds the stack) up to and including the prompt p, and calls the function f with a single argument k representing the delimited continuation from the call to withSubCont up to but not including the prompt. This captures a delimited continuation, analogous to how call/cc captures an undelimited continuation.

- pushSubCont k e --- pushes the delimited continuation k on the stack, and executes expression e in this new context. This composes the stack of k with the current stack, analogous to how calling the function representing a continuation in Scheme swaps the current stack with the stack of that continuation.

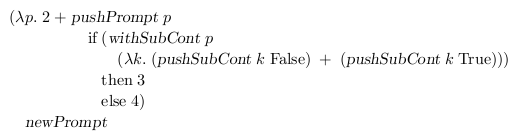

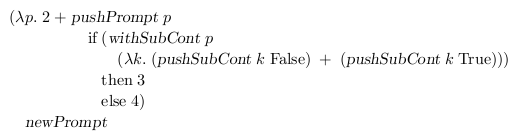

Now to the example! Delimited continuation examples are always hair-raising!! Brace yourself!!!

So, the first thing we do is call newPrompt (at the bottom) to obtain a fresh prompt, and then we pipe that into the function (at the top), so that we have a name, p, for the prompt. Inside that function we have the addition of 2 to a second expression, which uses pushPrompt to push p on the stack, i.e. delimits the stack at this point. The if is called inside the new context delimited by p.

Now it gets hairy: the if's test expression is a withSubCont, so it immediately aborts up to the outer pushPrompt and then calls the function that is its second argument with k bound to the rest of the computation between the withSubCont and the pushPrompt. What's the rest of that computation? Well, by the magic of delimited control, k is now equivalent to the function λb. if b then 3 else 4. This is what I meant when I said that delimited continuations allow us to abstract over control flow - we have just turned an arbitrary, dynamic control flow sequence into a function. (Well, it's not really a function, but we can use it like one.)

OK, so we have aborted back up to pushPrompt, and in k we have the rest of the computation as a function. What do we do with it? Well, we call it using pushSubCont once with False and once with True as argument, and we add the two results (4 and 3), giving us 7. Note that we just called the delimited continuation twice! We can not only abstract over arbitrary control flows, we can also call them as many times as we like.

Now the whole shebang inside pushPrompt is over, and we return to the addition of 2 to our result of 7, giving us 9.
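If you'd like to run the example, here is a rough Python sketch of it in continuation-passing style. It's a toy under loud assumptions: it supports only a single, implicit prompt (so there is no newPrompt and no p argument), the names unit, bind, push_prompt and with_sub_cont are mine, and it is not how the paper implements things. In this simplified setting, pushSubCont k e boils down to calling k(e).

```python
# A computation is a function that takes a continuation k and calls it
# with its result. Single implicit prompt only (an assumption of this sketch).

def unit(value):
    """A computation that just passes value to its continuation."""
    return lambda k: k(value)

def bind(comp, fn):
    """Run comp, then feed its result to fn, which returns the next computation."""
    return lambda k: comp(lambda value: fn(value)(k))

def push_prompt(comp):
    """Delimit comp: run it with an identity continuation, then continue outside."""
    return lambda k: k(comp(lambda value: value))

def with_sub_cont(f):
    """Capture the continuation up to the prompt as k, abort to the prompt, return f(k)."""
    return lambda k: f(k)

def example():
    # withSubCont p (λk. pushSubCont k False + pushSubCont k True)
    test = with_sub_cont(lambda k: k(False) + k(True))
    # if <test> then 3 else 4
    branch = bind(test, lambda b: unit(3 if b else 4))
    # 2 + pushPrompt p (...)
    whole = bind(push_prompt(branch), lambda v: unit(2 + v))
    return whole(lambda value: value)

print(example())  # 9
```

Inside with_sub_cont, k really is the function λb. if b then 3 else 4, which is why calling it twice yields 4 + 3 before the outer addition of 2 happens.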

Previously: papers on delimited continuations.

A little Scheme interpreter

I've ported the Scheme interpreter from Kent Dybvig's dissertation, Three Implementation Models for Scheme, to JavaScript: Schampignon.

I think this is really a nice way to learn about implementing tail-calls and first-class continuations.

Schampignon is undocumented, because it's really just a rewrite of the Scheme code in section 3.4 of the dissertation, which explains things nicely. (Still, it took me a couple of days to figure out how it works.)

My next steps will be to add delimited continuations and fexprs to the code.

Going co-nuclear

I was discussing implementation inheritance on Twitter (no really!), when it dawned on me that my discussion partner was from a different "paradigm cult", and no agreement was possible.

For the Clojurian I was talking to, implementation inheritance is co-nuclear. There's probably no way for him to accept that it has valid uses.

John Shutt describes it nicely in his post Memetic Organisms:

The meme set carried by the [memetic] organism includes a mass of theories, some of which contradict each other. At the center of this mass is a nucleus of theories that are supposed to be believed (part of what Kuhn called a paradigm). Surrounding the nucleus are theories that are meant to be contrasted with the paradigm and rejected, together with memes about how to conduct the contrast; one might call this surrounding material the co-nucleus.

Sunday, August 7, 2011

Intellectual badass Sunday reading

"Nerd? We prefer the term intellectual badass." – Unknown

AMD Fusion Developer Summit Keynotes, about AMD's upcoming "Cray on a Chip". Basically, they'll make the parallel processors (formerly known as GPUs) coherent with the brawny sequential CPUs, allowing you to "switch the compute" between sequential and parallel, while operating on the same data (e.g. fill an array sequentially, then switch to parallel processing to crunch the data, all in the same program - and memory space).

[racket] design and use of continuation barriers, by Matthew Flatt. "Use a continuation barrier when calling unknown code and when it's too painful to contemplate multiple instantiations of the continuation leading to the call."

Tutorial: Metacompilers Part 1, about META II, the one Alan Kay and the other VPRI folks always talk about. "You won't really find metacompilers like META II in compiler textbooks as they are primarily concerned with slaying the dragons of the 1960s using 1970s formal theory."

Microsoft and the Bavarian Illuminati: "In the Object Linking and Embedding 2.0 Programmer's Reference there is a very curious term. On page 78, the second paragraph starts with the sentence, 'In the aggregation model, this internal communication is achieved through coordination with a special instance of IUnknown interface known as the /controlling unknown/ of the aggregate.'"

Saturday, August 6, 2011

Sunday, July 31, 2011

The meaning of forms in Lisp and Kernel

There are so many nice things to be learned from Lisp - and Kernel.

Take this:

But Lisp is never content with a form alone: it always wants to evaluate the form, determine its meaning.

For instance, when you input a symbol form, Lisp will not just return the symbol object - it will return the value of the variable binding referenced by the symbol - which is the symbol's meaning, per Lisp's rules of form evaluation.

No wonder then that one of the central features of Lisp is quotation - telling Lisp not to determine the meaning of a form presented to it, but rather give us the form itself.

So what's a form? A form is "any object meant to be evaluated", in other words, we may treat any object as a form, as something to evaluate, as something to determine the meaning of.

Lisp's evaluation rules do not prescribe in any way that forms are bound to text-based representations - a form is any first-class object meant to be evaluated. (And in fact, Racket, a leading Lisp implementation, already supports images as forms.)
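To make the difference between a form and its meaning concrete, here is a minimal sketch in Python. It is not any real Lisp's evaluator: Symbol, QUOTE and evaluate are names invented for illustration, and forms are plain Python objects, not text.

```python
class Symbol:
    """A symbol form; its meaning is the value bound to its name."""
    def __init__(self, name):
        self.name = name

    def __repr__(self):
        return self.name

QUOTE = Symbol("quote")  # hypothetical marker for the quotation form

def evaluate(form, env):
    """Determine the meaning of a form in an environment (a dict here)."""
    if isinstance(form, Symbol):
        return env[form.name]            # a symbol means its variable's value
    if isinstance(form, list) and form:
        if form[0] is QUOTE:
            return form[1]               # quotation: give back the form itself
        function = evaluate(form[0], env)
        arguments = [evaluate(operand, env) for operand in form[1:]]
        return function(*arguments)      # function call: operands evaluated first
    return form                          # any other object evaluates to itself

x = Symbol("x")
env = {"x": 42, "+": lambda a, b: a + b}

print(evaluate(x, env))                    # 42
print(evaluate([QUOTE, x], env))           # x
print(evaluate([Symbol("+"), x, 1], env))  # 43
```

The same symbol object x shows up twice: evaluated, it yields 42; quoted, it comes back untouched.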

So Lisp is chiefly concerned with determining the meaning of forms read from the user.

Macros then allow us to extend the variety of forms recognized by a Lisp, beyond the ways that mere function calls can.

Where in a function call the meaning of every operand is established automatically before the function is actually called, macros have total freedom in evaluating - determining the meaning of - operands, or not evaluating them at all (as a short-circuiting AND operator may do, for example).

But Common Lisp and Scheme macros, by their preprocessing nature (a macro call works at a time conceptually different from runtime, translating source expressions to other expressions, to be evaluated at a later time), cannot be used in a first-class fashion. For example, it is not possible to apply the AND macro to a list of dynamically computed values (without resorting to EVAL), like we could with a function.

In a sense, macros of the preprocessing kind restrict our ability to determine the meaning of forms: With preprocessing macros, we may only determine the meaning of forms available at preprocessing time.

This is where Kernel's fexprs come in. Fexprs drop the requirement of macros to be expandable in a separate preprocessing step. Thus, we may apply the AND fexpr to a list of dynamically computed operands, for example.

But fexprs go beyond being merely a more convenient replacement for macros. As John Shutt shows, a new lexically-scoped formulation of fexprs - as presented in Kernel - is even more fundamental to Lisp than lambda. In Kernel, lambda is defined in terms of vau, the ultimate abstraction:

    ($define! $lambda
      ($vau (formals . body) env
        (wrap (eval (list* $vau formals #ignore body)
                    env))))

Through the power of fexprs, Kernel needs only three built-in operators (define, vau, and if) as well as some built-in functions. This is vastly simpler than any definition of Scheme! Scheme built-ins such as lambda, set! (!), define-syntax and others fall directly out of fexprs, for free, radically simplifying the implementation. But I'm rambling.

In essence, fexprs liberate Lisp's ability to determine the meaning of forms.
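Here's a rough sketch of the lambda-from-vau idea as a toy evaluator in Python. It is not Kernel and not Shutt's implementation: the names Symbol, Env, Applicative, IGNORE, evaluate, combine and make_ground_env are made up for this sketch, formal parameter lists are flat (so $lambda takes exactly one body form instead of Kernel's dotted formals . body), and a plain assignment into the environment stands in for $define!. What it does keep is the shape of the Kernel definition: $lambda is built from $vau, eval, list and wrap instead of being wired into the evaluator.

```python
class Symbol(str):
    """A symbol form, looked up in the environment when evaluated."""

class Env(dict):
    """A first-class environment: bindings plus a link to a parent."""
    def __init__(self, parent=None):
        super().__init__()
        self.parent = parent

    def lookup(self, name):
        env = self
        while env is not None:
            if name in env:
                return env[name]
            env = env.parent
        raise NameError(name)

class Applicative:
    """Wraps a combiner so that operands are evaluated before the call."""
    def __init__(self, underlying):
        self.underlying = underlying

IGNORE = object()  # stands in for Kernel's #ignore

def evaluate(form, env):
    if isinstance(form, Symbol):
        return env.lookup(form)
    if isinstance(form, list) and form:
        return combine(evaluate(form[0], env), form[1:], env)
    return form  # everything else, including combiners, evaluates to itself

def combine(combiner, operands, env):
    if isinstance(combiner, Applicative):
        args = [evaluate(operand, env) for operand in operands]
        return combine(combiner.underlying, args, env)
    return combiner(operands, env)  # operative: operands arrive unevaluated

def vau(operands, static_env):
    formals, env_formal, body = operands
    def operative(args, dynamic_env):
        local = Env(static_env)
        local.update(zip(formals, args))
        if env_formal is not IGNORE:
            local[env_formal] = dynamic_env  # the caller's environment
        return evaluate(body, local)
    return operative

def make_ground_env():
    env = Env()
    env["$vau"] = vau
    env["$if"] = lambda ops, e: evaluate(ops[1] if evaluate(ops[0], e) else ops[2], e)
    env["wrap"] = Applicative(lambda args, e: Applicative(args[0]))
    env["eval"] = Applicative(lambda args, e: evaluate(args[0], args[1]))
    env["list"] = Applicative(lambda args, e: list(args))
    env["*"] = Applicative(lambda args, e: args[0] * args[1])
    return env

S = Symbol
# ($vau (formals body) env (wrap (eval (list $vau formals #ignore body) env)))
LAMBDA_FORM = [S("$vau"), [S("formals"), S("body")], S("env"),
               [S("wrap"),
                [S("eval"),
                 [S("list"), S("$vau"), S("formals"), IGNORE, S("body")],
                 S("env")]]]

env = make_ground_env()
env["$lambda"] = evaluate(LAMBDA_FORM, env)  # stands in for $define!

# (($lambda (x) (* x x)) 7)
squared = evaluate([[S("$lambda"), [S("x")], [S("*"), S("x"), S("x")]], 7], env)
print(squared)  # 49

# $if is an operative: the untaken branch, an unbound symbol, is never evaluated
print(evaluate([S("$if"), False, S("unbound"), 0], env))  # 0
```

Note that $lambda itself ends up as an ordinary operative living in the environment, a first-class value like any other, which is the point of the exercise.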

It's getting close to midnight (on a Sunday!), so I can't do fexprs or my excitement about them justice. But I hope this post has made you think about forms and their meanings, and that you'll check out Kernel and share the excitement about the brave new Lisp world of fexprs.

Some history

Reading the interesting Portable and high-level access to the stack with Continuation Marks, I found the following quote about procedure calls (my emphasis):

This [not properly tail-calling] behavior of the SECD machine, and more broadly of many compilers and evaluators, is principally a consequence of the early evolution of computers and computer languages; procedure calls, and particularly recursive procedure calls, were added to many computer languages long after constructs like branches, jumps, and assignment. They were thought to be expensive, inefficient, and esoteric. Guy Steele outlined this history and disrupted the canard of expensive procedure calls, showing how inefficient procedure call mechanisms could be replaced with simple “JUMP” instructions, making the space complexity of recursive procedures equivalent to that of any other looping construct.

Then it gets highly ironic, with a quote by Alan Kay:

Though OOP came from many motivations, two were central. ... [T]he small scale one was to find a more flexible version of assignment, and then to try to eliminate it altogether.

Saturday, July 30, 2011

Some nice paperz on delimited continuations and first-class macros

My current obsessions are delimited continuations (see my intro post) and first-class macros (fexprs). Here are some nice papers related to these topics:

Three Implementation Models for Scheme is the dissertation of Kent Dybvig (of Chez Scheme fame). It contains a very nice abstract machine for (learning about) implementing a Scheme with first-class continuations. I'm currently trying to grok and implement this model, and then extend it to delimited continuations.

A Monadic Framework for Delimited Continuations contains probably the most succinct description of delimited continuations (on page 3), along with a typically hair-raising example of their use.

Adding Delimited and Composable Control to a Production Programming Environment. One of the authors is Matthew Flatt, so you know what to expect - a tour de force. The paper is about how Racket implements delimited control and integrates it with all the other features of Racket (dynamic-wind, exceptions, ...). Apropos, compared to Racket, Common Lisp is a lightweight language.

Fexprs as the basis of Lisp function application or $vau: the ultimate abstraction and the Revised-1 Report on the Kernel Programming Language. These two are probably the two papers I would take to the desert island at the moment. I'm only a long-time apprentice of programming languages, but I know genius when I see it.

Subcontinuations. This is an early paper on delimited continuations. It also describes control filters, a low level facility on top of which dynamic-wind can be implemented. Control filters are also mentioned - in passing - as a nice tool in the Racket paper (above).

If you know of any related nice papers, let me know!

On Twitter, Paul Snively mentioned Oleg's paper Delimited Control in OCaml, Abstractly and Concretely, but probably only because he's mentioned in the Acknowledgements - kidding! It's a great paper, and I'm studying it too, but unfortunately it's not really applicable to implementing delimited control in JavaScript, which is what I'm trying to do.

Thursday, July 28, 2011

Everything you always wanted to know about JavaScript...

...but were afraid to ask Brendan Eich. (the whole thread, starting with this post)

Thursday, July 21, 2011

On the absence of EVAL in ClojureScript

[I got bored with the original text of this post. In short: the authors of ClojureScript have taken the pragmatic approach of reusing Clojure-on-the-JVM to generate JavaScript. This means there can be no EVAL in ClojureScript. I think that's sad because Lisp is one of the finest application extension languages, and without EVAL you can't do a really extensible app. Apparently, the ClojureScript authors view the browser-side as something of an adjunct to server apps. In my view, all the action of the future will be in the browser, and not on the server.]

Thursday, July 14, 2011

Frankenprogramming

David Barbour throws down the gauntlet to John Shutt in an already interesting and entertaining ("about as coherent as a 90 day weather forecast") LtU thread:

John Shutt would like to break the chains of semantics, e.g. using fexprs to reach under-the-hood to wrangle and mutate the vital organs of a previously meaningful subprogram. Such a technique is workable, but I believe it leads to monolithic, tightly coupled applications that are not harmonious with nature. I have just now found a portmanteau that properly conveys my opinion of the subject: frankenprogramming.

I'm looking forward to John's reply. In the meantime, I have to say that I like to view this in a more relaxed way. As Ehud Lamm said:

Strict abstraction boundaries are too limiting in practice. The good news is that one man's abstraction breaking is another's language feature.

Previously, regarding fexprs: John Shutt's blog

Sunday, July 10, 2011

Atwood's Law

Any application that can be written in JavaScript, will eventually be written in JavaScript.

(source; also: Duke's Law)

Friday, July 8, 2011

it is nice to have the power of all that useful but obsolete software out there

Subject: Re: Switching tasks and context

∂08-Jul-81 0232 Nowicki at PARC-MAXC Re: Switching tasks and context

Date: 6 Jul 1981 10:32 PDT

From: Nowicki at PARC-MAXC

In-reply-to: JWALKER's message of Wednesday, 1 July 1981 11:52-EDT

To: JWALKER at BBNA

cc: WorkS at AI

The important thing is that such a tool WORKS, and I find incredibly useful. The virtual terminal uses ANSI standard escape sequences, so we can use all the tools that have been around for a long time (like the SAIL Display Service, Emacs under TOPS-20 or Unix, etc.) with NO modifications. We are planning to use this to develop a more integrated system, but it is nice to have the power of all that useful but obsolete software out there.

(via Chris, who's scouring Unix history for us)


Wednesday, June 29, 2011

PL nerds: learn cryptography instead

I've never studied cryptography. So when the paper Tahoe – The Least-Authority Filesystem fell in my lap, I was perplexed.

Using just two primitives, secret-key and public-key cryptography, they build an amazing solution to information storage. (Try to understand the two main diagrams in the paper. It's not hard, and it's amazing.)

Before encountering Tahoe-LAFS, I saw cryptography as just a way to keep stuff secret. But cryptography also provides us with tools to design programs that wouldn't be possible otherwise.
