Oleg's argument against call/cc links to one of his lesser-known posts, Delimited continuations do dynamic-wind. In it he first shows how to implement yielding generators in terms of delimited control, and then shows how such generators lead to a natural definition of dynamic-wind in userland, whereas with undelimited continuations, dynamic-wind has to be primitive.

(This is not entirely true. Kernel can implement dynamic-wind in userland, too, by means of its guarded undelimited continuations. And in Scheme 48, dynamic-wind is also implemented in userland with undelimited continuations, albeit in terms of a slightly lower-level API than call/cc. The same is true for other Schemes, such as MIT Scheme. And probably the earliest definition of dynamic-wind, in Embedding Continuations in Procedural Objects, is also in userland, so I'm actually a bit confused as to why Oleg considers dynamic-wind primitive...)

Back to Oleg's generators in terms of delimited control. While one may argue against first-class continuations, whether delimited or not, I think having them in the language is great for quickly prototyping different ideas, such as generators. This has always been a forte of Lisp, and so high-powered control flow operations seem a good fit for the language, even if their runtime cost may be slightly higher than a more specialized, native implementation of some specific control feature, such as generators.

The basic idea of generators is this: you have a block of code, the generator, which may either return a value normally by reaching the end of the block, or intermittently yield a value. To call a generator, we need a boundary around it, so we wrap it in a prompt with reset. Yielding suspends the generator, capturing its delimited continuation up to the prompt, and delivers the value and this continuation to the caller, outside the boundary. Value and continuation are wrapped up in a special record type, so the caller can distinguish yields from an ordinary return, which doesn't wrap the value in any way (and obviously doesn't provide a continuation for resuming either). (This could probably be handled more elegantly with Racket's abort handlers.)
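To make the protocol concrete, here is a minimal sketch in Python rather than Scheme. Python has no delimited continuations, so the sketch borrows Python's native generators to stand in for the captured continuation. The names `Yielded`, `reset`, and `body` are made up for this illustration and are not taken from Oleg's code. Unlike a real delimited continuation, each `resume` here can only be used once.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Yielded:
    """Record delivered to the caller when the generator yields."""
    value: Any
    resume: Callable[[Any], Any]   # stand-in for the captured continuation


def reset(make_body):
    """Run a generator body inside a boundary.

    Returns a Yielded record if the body yields, or the plain,
    unwrapped value if the body returns normally.
    """
    gen = make_body()

    def step(sent):
        try:
            value = gen.send(sent)
        except StopIteration as done:
            return done.value          # ordinary return: not wrapped
        return Yielded(value, step)    # yield: value plus a way to resume

    return step(None)


def body():
    x = yield 1
    y = yield 2
    return x + y


r1 = reset(body)      # a Yielded record carrying 1
r2 = r1.resume(10)    # a Yielded record carrying 2
r3 = r2.resume(32)    # the plain return value of the body
```

The caller tells the two cases apart with a type check on the result, which is the job of the special record type described above.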

In this Gist, I've commented Oleg's implementation of generators and dynamic-wind.

I think it's a great example of how simple it is to add specific control flow features to a language with general (delimited) continuations.

Oh, and in typical Oleg style, the article then goes on about how you can implement call/cc in terms of generators, which makes his dynamic-wind compatible with existing Scheme examples using call/cc.

Saturday, August 11, 2012

Tuesday, August 7, 2012

Scheme's missing ingredient: first-class type tags

I've always felt that Scheme does data and types wrong, but I couldn't really say what bothered me until yesterday. After all, R7RS now has define-record-type for creating disjoint types. But that ain't enuff!

In my new language, Wat, I've found a satisfying solution, inspired by Kernel's encapsulation types, but going beyond them slightly. There are two procedures:

- (make-type) returns a list of three elements: 1) a first-class type 2) a tagger function for tagging a value with that type, creating a tagged object and 3) an untagger function for extracting the value of tagged objects of that type.

- (type-of obj) returns the first-class type of an object. The crucial point is that type-of not only works for tagged objects of user-defined types created by make-type, but also for all built-in types. E.g. (type-of 12) will return the number first-class type.

This system has the benefits of Kernel's encapsulated types: only someone with access to the tagger function (capability) may create new instances of a type. Only someone with access to the untagger function may access the contents of tagged objects. So object contents are potentially fully encapsulated.

But at the same time, the fact that every object, including built-in ones, has a first-class type makes it possible to efficiently program generically. E.g. one may create Smalltalk-like dynamic dispatch by using the first-class types of objects as indexes into a virtual lookup table of a generic function. This is not possible in either Scheme or Kernel. In both languages, programming generically requires one to use a cascade of type predicates (e.g. number?).

Example in Wat:

; destructuringly bind the three elements
; returned by make-type
(def (person-type person-tagger person-untagger) (make-type))

(define (make-person name email)
  (person-tagger (list name email)))

; untagger also performs a type check that
; person is in fact of type person
(define (get-name person)
  (car (person-untagger person)))

(define (get-email person)
  (cadr (person-untagger person)))

(define p1 (make-person "Quux" "quux@example.com"))

(get-name p1) --> "Quux"
(get-email p1) --> "quux@example.com"
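For readers who want to play with the idea outside Wat, here is a rough Python sketch of the two procedures plus the table-based dispatch mentioned earlier. It is only an approximation under stated assumptions: the `Type` and `_Tagged` classes, the optional `name` argument, and the `describe` generic function are invented for this example, and Python cannot really hide the contents of a tagged object the way a capability-based design would.

```python
class Type:
    """A first-class type; instances are compared by identity."""
    def __init__(self, name):
        self.name = name

    def __repr__(self):
        return f"#<type {self.name}>"


class _Tagged:
    """A value tagged with a user-defined type."""
    def __init__(self, type_, value):
        self.type = type_
        self.value = value


_builtin_types = {}   # Python class -> Type, filled lazily


def make_type(name="anonymous"):
    """Return (type, tagger, untagger), like Wat's (make-type)."""
    t = Type(name)

    def tagger(value):
        return _Tagged(t, value)

    def untagger(obj):
        # The untagger doubles as a type check.
        if not isinstance(obj, _Tagged) or obj.type is not t:
            raise TypeError(f"not an instance of {t!r}")
        return obj.value

    return t, tagger, untagger


def type_of(obj):
    """Works for tagged objects and for built-in values alike."""
    if isinstance(obj, _Tagged):
        return obj.type
    return _builtin_types.setdefault(type(obj), Type(type(obj).__name__))


person_type, person_tagger, person_untagger = make_type("person")


def make_person(name, email):
    return person_tagger((name, email))


def get_name(person):
    return person_untagger(person)[0]


# A generic function: one table lookup instead of a predicate cascade.
describe_methods = {
    type_of(0): lambda n: f"number {n}",
    person_type: lambda p: f"person {get_name(p)}",
}


def describe(obj):
    return describe_methods[type_of(obj)](obj)


p1 = make_person("Quux", "quux@example.com")
```

Because `type_of` returns the same type object for every integer, `describe(12)` and `describe(p1)` both resolve with a single dictionary lookup.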

Wednesday, August 1, 2012

Understanding metacontinuations for delimited control

Metacontinuations arise in some implementations of delimited control such as in A Monadic Framework for Delimited Continuations.

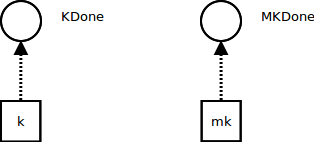

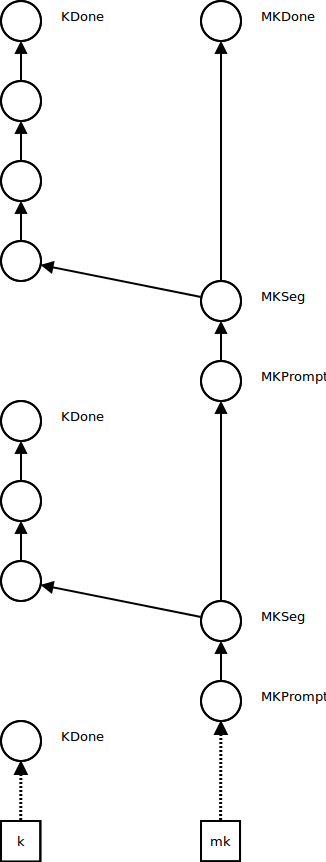

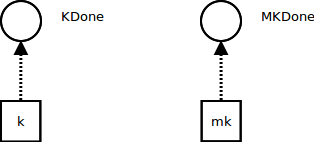

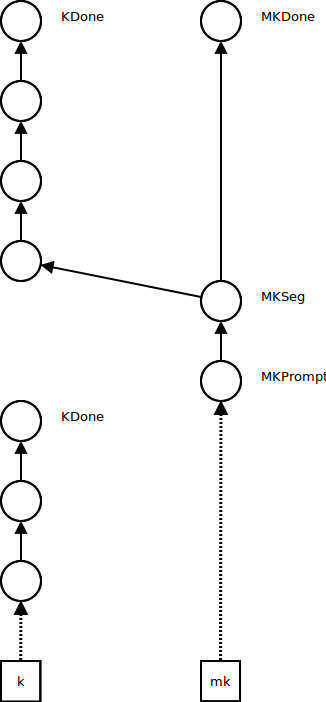

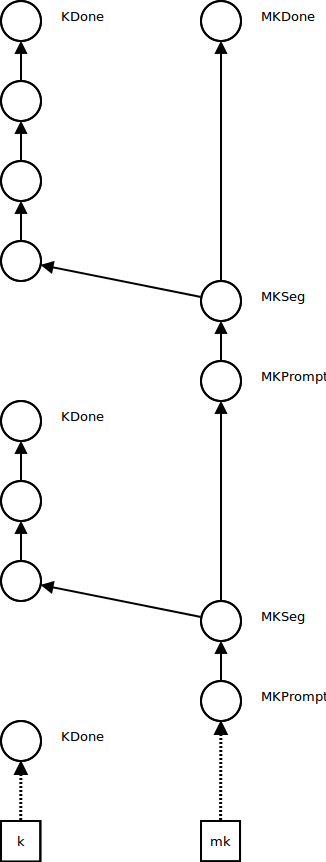

In addition to the usual continuation register K, an interpreter with metacontinuations has a metacontinuation register MK.

Initially, K points to an empty continuation KDone, and MK points to an empty metacontinuation MKDone:

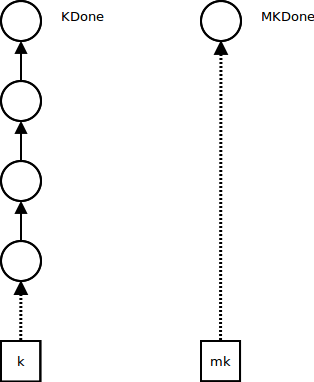

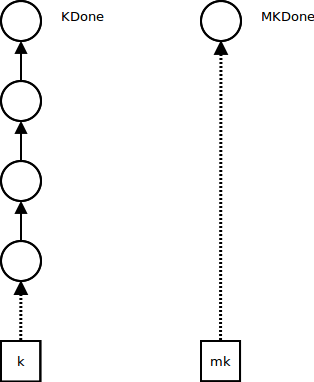

Ordinary computations influence only the continuation K - they push continuation frames while the metacontinuation MK stays the same:

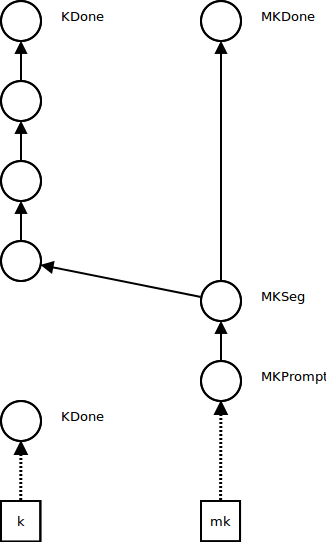

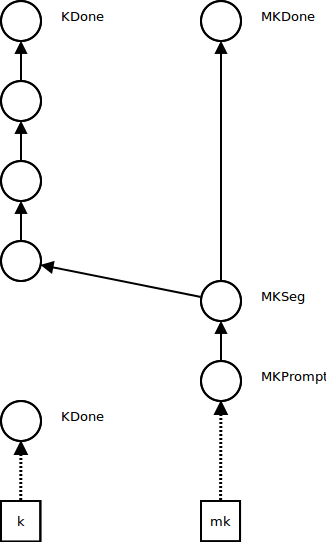

Control operations such as pushing a prompt (continuation delimiter) influence the metacontinuation. A segment metacontinuation frame MKSeg stores the continuation segment at which the control effect occurred and a pointer to the next metacontinuation. A prompt metacontinuation frame MKPrompt remembers the prompt and points to the segment frame (the reason for splitting this operation into two metacontinuation frames is that it makes the code simpler; see the paper). MK now points to the prompt metaframe, and K points to a new empty continuation KDone:

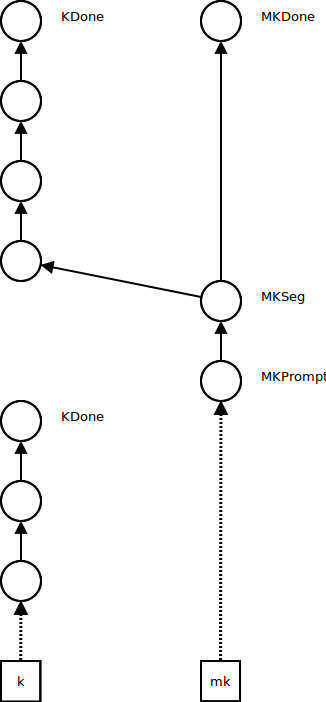

Further non-control computations occur in the new continuation, while the metacontinuation stays the same, as before:

Further control operations push frames onto the metacontinuation, and create new empty continuations, as before:

When the ordinary continuation reaches KDone, we look at the metacontinuation: if it's MKDone, the program is finished and we return its result. Otherwise, we underflow the metacontinuation by reestablishing the next continuation segment as the current continuation and the next metacontinuation as the current metacontinuation.
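The two registers and the underflow rule can be modeled in a few lines of Python. This is a toy sketch, not the code from the paper: the frame names follow the text above, but `KFrame`, `push_frame`, `push_prompt`, and `return_value` are hypothetical helpers, and skipping over a prompt frame during underflow is my own simplification.

```python
from dataclasses import dataclass
from typing import Any, Callable


class KDone:
    """Empty continuation."""


@dataclass
class KFrame:
    fn: Callable[[Any], Any]   # what this frame does with the value it receives
    next: Any                  # KFrame or KDone


class MKDone:
    """Empty metacontinuation."""


@dataclass
class MKSeg:
    k: Any                     # saved continuation segment
    next: Any                  # next metacontinuation


@dataclass
class MKPrompt:
    prompt: Any
    next: Any                  # points to the segment frame


def push_frame(fn, k, mk):
    """Ordinary computation: only K grows, MK stays the same."""
    return KFrame(fn, k), mk


def push_prompt(prompt, k, mk):
    """Control operation: save K in MK and start a new empty K."""
    return KDone(), MKPrompt(prompt, MKSeg(k, mk))


def return_value(value, k, mk):
    """Return a value through K, underflowing into MK when K is empty."""
    while True:
        if isinstance(k, KFrame):
            value, k = k.fn(value), k.next
        elif isinstance(mk, MKDone):
            return value               # both registers empty: program finished
        elif isinstance(mk, MKPrompt):
            mk = mk.next               # drop the prompt, expose its segment
        else:
            k, mk = mk.k, mk.next      # underflow: reinstate the saved segment


k, mk = KDone(), MKDone()
k, mk = push_frame(lambda v: v + 1, k, mk)   # outer segment
k, mk = push_prompt("p", k, mk)
k, mk = push_frame(lambda v: v * 2, k, mk)   # inner segment
result = return_value(5, k, mk)
```

Here the inner segment doubles the value, the machine underflows through the prompt, and the outer segment then adds one.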

An alternative API for continuations

Call/cc is the most well-known API for exposing first-class continuations to userland. But there is another API that's used in Scheme 48 and is also described in a paper by Feeley.

(continuation-capture f) --- calls f with the current continuation as argument. In contrast to call/cc, the continuation is not a procedure, but an opaque first-class object. (In Scheme 48, this is called primitive-cwcc.)

(continuation-graft k thunk) --- calls thunk in the continuation k. In contrast to call/cc, k doesn't receive a value computed in another continuation, but rather thunk's effect is performed in k. (Scheme 48: with-continuation.)

The implementation difference to call/cc is minimal, and expressing call/cc in terms of this API is simple:

(define (call/cc f)
  (continuation-capture
    (lambda (k)
      (f (lambda (val)
           (continuation-graft k (lambda () val)))))))

I can't exactly put my finger on it, but this API seems more powerful than call/cc. In the implementation of delimited dynamic binding in terms of continuation marks I'm working on, there is a need for fetching the marks of another continuation. With call/cc this is impossible, but with this API I can prepend a thunk to the continuation in question, then in this thunk fetch the continuation's marks, and then jump out of it again.
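The flavor of this API can be approximated in Python, with a big caveat: Python has no first-class continuations, so the sketch below only supports escaping (one-shot, upward) continuations, emulated with an exception. The function names mirror the API above; the `Continuation` and `_Graft` classes are invented for the illustration. What it does show is the key difference from call/cc: the thunk runs only after control has unwound back to the captured continuation.

```python
class Continuation:
    """Opaque continuation object (not callable, unlike with call/cc)."""
    def __init__(self):
        self.active = True


class _Graft(BaseException):
    """Carries a thunk back to the capture point of continuation k."""
    def __init__(self, k, thunk):
        super().__init__()
        self.k = k
        self.thunk = thunk


def continuation_capture(f):
    k = Continuation()
    try:
        return f(k)
    except _Graft as graft:
        if graft.k is not k:
            raise                  # meant for an outer capture
        thunk = graft.thunk
    finally:
        k.active = False
    return thunk()                 # runs after unwinding back to k


def continuation_graft(k, thunk):
    if not k.active:
        raise RuntimeError("this sketch only supports escaping continuations")
    raise _Graft(k, thunk)


def call_cc(f):
    """call/cc expressed with the two primitives, as in the Scheme code."""
    return continuation_capture(
        lambda k: f(lambda val: continuation_graft(k, lambda: val)))


trace = []


def inner(k):
    try:
        return continuation_graft(
            k, lambda: trace.append("thunk") or "done")
    finally:
        trace.append("unwound")


result = continuation_capture(inner)
escaped = call_cc(lambda k: 1 + k(41))
```

The `trace` list records "unwound" before "thunk", which is the sense in which the thunk's effect is performed in k rather than at the point where graft was called.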

Continuation marks

Continuation marks are a mechanism for attaching key-value data to continuation frames. There are two primitives:

(call/cm key value thunk) --- calls thunk with a new continuation mark binding key to value. If the current innermost continuation frame already has a mark with that key, it is overwritten.

(current-marks key) --- returns the list of values of current marks with the given key, from innermost to outermost continuation frame.

Example:

(call/cm 'foo 12 (lambda () (current-marks 'foo))) ---> (12)

So, continuation marks are an obvious mechanism for implementing dynamic binding. That duplicate marks on the same frame overwrite each other makes them play nice with TCO.

I've implemented CMs in the Kernel implementation I'm currently playing with. Every continuation frame has a marks dictionary mapping keys to values. Current-marks walks the continuation chain in the obvious way, collecting matching marks into a list. Call/cm clones the current continuation frame (including the marks dictionary) and updates the binding for the given key. I'm not entirely sure if that's the correct way to implement CMs, but it seems so.
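Here is a small Python model of that implementation strategy. It is a sketch under assumptions, not the Kernel code: the continuation is modeled as an explicit list of mark dictionaries, and `call_in_new_frame` is a hypothetical helper standing in for a non-tail call, since Python gives no access to its real frames in this way.

```python
_frames = [{}]   # mark dictionaries, outermost frame first


def call_in_new_frame(thunk):
    """Model a non-tail call: push a fresh frame for the duration of thunk."""
    _frames.append({})
    try:
        return thunk()
    finally:
        _frames.pop()


def call_cm(key, value, thunk):
    """Call thunk with key bound to value on the current innermost frame."""
    saved = _frames[-1]
    _frames[-1] = {**saved, key: value}   # clone the frame, update the mark
    try:
        return thunk()
    finally:
        _frames[-1] = saved


def current_marks(key):
    """Values for key, from innermost to outermost frame."""
    return [marks[key] for marks in reversed(_frames) if key in marks]


simple = call_cm("foo", 12, lambda: current_marks("foo"))

# Same frame: the second mark overwrites the first.
overwritten = call_cm(
    "foo", 1, lambda: call_cm("foo", 2, lambda: current_marks("foo")))

# Different frames: both marks are visible, innermost first.
stacked = call_cm(
    "foo", 1,
    lambda: call_in_new_frame(
        lambda: call_cm("foo", 2, lambda: current_marks("foo"))))
```

The `overwritten` case is the one that matters for TCO: a chain of tail calls that each set the same key never accumulates more than one mark.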

I'm currently trying to implement delimited dynamic binding on top of continuation marks (using the metacontinuation approach from A Monadic Framework), but this seems slightly above my pay grade.

Thursday, August 18, 2011

A little Scheme interpreter

I've rewritten, in JavaScript, the Scheme interpreter from Kent Dybvig's dissertation Three Implementation Models for Scheme. The result is called Schampignon.

I think this is really a nice way to learn about implementing tail-calls and first-class continuations.

Schampignon is undocumented, because it's really just a rewrite of the Scheme code in section 3.4 of the dissertation, which explains things nicely. (Still, it took me a couple of days to figure out how it works.)

My next steps will be to add delimited continuations and fexprs to the code.

Wednesday, June 16, 2010

The Republic of Perl

A while ago I read everything I could find about Perl 6. After all, they have a lot of experience in large-scale software development.

But, oh the humanity, is this language weird. Just as one example, there's an operator, <== IIRC, that lets you build something like pipelines of function calls.

As in previous versions of Perl, stuff like this leads to a language where no one, not even the "designer", can tell exactly what a given expression will do without evaluating it. And that's arguably even worse than a bolted-on object system.

To quote from Apocalypse Now!,

WILLARD

"They told me that you had gone totally insane and that your methods were unsound."

KURTZ

"Are my methods unsound?"

WILLARD

"I don't see any method at all, sir."

But maybe there's a method behind the madness... If you load your language with all sorts of weird, crazy features that other languages don't have, you're increasing the potential that the language will develop a cult of equally crazy followers.

People will be so brain-damaged by your "innovations" that they will come to depend on them, learn their way around their countless sharp edges, and miss them in other languages. Your language has become a world unto itself, a republic.

Furthermore, I can see how such features create a sense of community. After all, once you've invested significant brainpower in memorizing irrelevant crap, you're exhausted and like to hang around with other people who've shot their feet off, and chat and joke, and proudly call your language a Swiss army chainsaw.

Take Scheme as an anti-example. Scheme was, up to and including R5RS, designed by unanimous vote. Thus Scheme has only generally approved features, but lacks character. Maybe that's why the "Scheme community" is often put into scare quotes.

So, if you'd like a cult following for your language, maybe you should go Perl's way of adding whatever crazy idea lurks in the dark recesses of your language-designer's reptile brain.

The horror, the horror.

Tuesday, May 4, 2010

Doing the Right Thing

As I've said before, the great thing about Lisp is its age. Like a good wine, Lisp has had a long time to age.

I think one of the best examples of the ripeness is R6RS' expansion process. It's a very good specification of a quite complex process, namely the processing of a number of expressions by the compiler.

The complexity stems from the fact that Lisp is at its core a multi-phase language: source code contains runtime values, such as variables and functions, as well as compile-time values, such as macros.

In a Lisp source file, one expression may be for execution at runtime, while the next is for execution at compile-time.

The intermingling of these different phases in a single source file seems to be somewhat of a historical accident, as one day we'll all be programming in a hypercode environment, but well, that's how it is.

Anyway, if you're writing a Lisp, study R6RS' expansion process and enjoy! :)

Tuesday, April 20, 2010

Code is (more than) data

In Lisps like Common Lisp, code is data.

(In CL, code is represented as cons lists, which totally sucks, because you can't attach any metadata (such as line number information) to the code.)

One of the insights of the "Scheme community" is that code is more than data. They found this out by investigating hygiene.

In essence, code has some features that aren't easily captured by simply using s-expressions.

Namely, code has lexical structure. Code containing LET for example introduces new bindings, and if you simply render that as lists, you're losing important information.

If you want hygiene (hint: you want it), simply using s-expressions isn't enough. You need syntax objects.
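As a rough illustration of what a syntax object adds over a bare s-expression, here is a Python sketch. Everything in it is an assumption made for the example: the `Syntax` record, its fields, and the use of scope sets (loosely modeled on the sets-of-scopes idea used by some expanders) are not taken from any particular Scheme, and real hygiene algorithms are considerably more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Syntax:
    datum: object                    # a symbol name (str) or a tuple of Syntax
    line: int                        # metadata a cons list cannot carry
    column: int
    scopes: frozenset = frozenset()  # lexical context of this piece of code


def add_scope(stx, scope):
    """Mark a syntax object, and everything inside it, with a scope."""
    datum = stx.datum
    if isinstance(datum, tuple):
        datum = tuple(add_scope(child, scope) for child in datum)
    return Syntax(datum, stx.line, stx.column, stx.scopes | {scope})


def same_binding(a, b):
    """Identifiers match only if both name and lexical context agree."""
    return a.datum == b.datum and a.scopes == b.scopes


# A user-written `tmp` and a macro-introduced `tmp`.
user_tmp = Syntax("tmp", line=3, column=10)
macro_tmp = add_scope(Syntax("tmp", line=1, column=5), "macro-1")

names_equal = user_tmp.datum == macro_tmp.datum   # as plain symbols: equal
hygienic_equal = same_binding(user_tmp, macro_tmp)
```

As plain symbols the two `tmp`s are indistinguishable, which is exactly the accidental capture that hygiene is meant to prevent. With the extra lexical information attached, they can be kept apart.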

More later.
